Do you know "Legendary Megadeth"'s song 'Holy Wars'?

Yes, Dave Mustaine was and always will be a prophet!

The words "Game STL" alone are enough to start an atomic war.

enjoy the carnage at: http://www.gamedev.net/community/forums/topic.asp?topic_id=518784

Tuesday, December 30, 2008

Friday, October 31, 2008

GI - Photon Mapping - Post 8 - Optimization Summary / Rant

In the last 3 days all the work has been on optimization. Below is a summary of the changes and the speedups they produced (speedup = old render time / new render time):

- Changing intersector from Möller-Trumbore to Optimized Wald Projection -> 1.25

- 2x2 Packet Tracing (SSE/SSE2 using SIMD intrinsics) -> 3.0-4.0

- Including ordering information in the traversal (do not traverse a BVH box if an intersection was already found in previous boxes and its t is smaller than the box's entry t): Mono->1.8, 2x2->1.3

- SIMDifying primary ray packet generation (instead of looping to generate the 4 rays) -> 1.05

- Changing BVH traversal from recursive to iterative: Mono->1.04, 2x2->soon

- Changing BVH memory allocation scheme to improve traversal cache friendliness: soon

- Changing BVH build algorithm (to SAH?): soon
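
The ordering test and the iterative traversal from the list above can be sketched roughly as follows. This is a minimal single-ray illustration in Python rather than the renderer's actual code: the `Node` layout, the sphere leaves and the function names `box_entry`, `sphere_hit` and `closest_hit` are all assumptions made for the example.

```python
import math
from dataclasses import dataclass, field

@dataclass
class Node:
    box_min: tuple
    box_max: tuple
    children: list = field(default_factory=list)  # inner node: child nodes
    spheres: list = field(default_factory=list)   # leaf: (center, radius) pairs

def box_entry(origin, direction, box_min, box_max):
    """Slab test: distance at which the ray enters the box, or None on a miss."""
    t_near, t_far = 0.0, math.inf
    for o, d, lo, hi in zip(origin, direction, box_min, box_max):
        if d == 0.0:
            # Ray is parallel to this slab: it misses unless the origin is inside it.
            if o < lo or o > hi:
                return None
            continue
        t0, t1 = (lo - o) / d, (hi - o) / d
        if t0 > t1:
            t0, t1 = t1, t0
        t_near, t_far = max(t_near, t0), min(t_far, t1)
        if t_near > t_far:
            return None
    return t_near

def sphere_hit(origin, direction, sphere):
    """Smallest non-negative ray parameter at which the ray hits the sphere, or None."""
    center, radius = sphere
    oc = [o - c for o, c in zip(origin, center)]
    a = sum(d * d for d in direction)
    b = 2.0 * sum(d * x for d, x in zip(direction, oc))
    c = sum(x * x for x in oc) - radius * radius
    disc = b * b - 4.0 * a * c
    if disc < 0.0:
        return None
    root = math.sqrt(disc)
    for t in ((-b - root) / (2.0 * a), (-b + root) / (2.0 * a)):
        if t >= 0.0:
            return t
    return None

def closest_hit(root, origin, direction):
    """Iterative (stack based) traversal; returns (t, sphere, number_of_sphere_tests)."""
    best_t, best, tests = math.inf, None, 0
    entry = box_entry(origin, direction, root.box_min, root.box_max)
    stack = [(entry, root)] if entry is not None else []
    while stack:
        t_entry, node = stack.pop()
        if t_entry > best_t:
            continue  # ordering test: a closer hit is already known, skip this box
        for sphere in node.spheres:
            tests += 1
            t = sphere_hit(origin, direction, sphere)
            if t is not None and t < best_t:
                best_t, best = t, sphere
        hits = []
        for child in node.children:
            t = box_entry(origin, direction, child.box_min, child.box_max)
            if t is not None:
                hits.append((t, child))
        # Push far children first so that the nearest child is popped first.
        hits.sort(key=lambda h: h[0], reverse=True)
        stack.extend(hits)
    return best_t, best, tests
```

With two leaf boxes lined up along the ray, the far box is never opened once the near one has produced a hit, which is where the saving comes from.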

I am also planning to have a look at GCC's profile-guided optimization. Acovea seems to be a fun thing to try as well.

Talking about low-level optimization, I recently read Randall Hyde's Write Great Code, Volume 1, and I am now reading Volume 2. Both are nice refreshers on assembly and low-level optimization, as well as good summaries of what modern compilers do and, more importantly, what they do not do although one might expect it.

The publicly available Software Optimization Guide for AMD64 Processors is similar in a way: it provides a list of dos and don'ts for those processors, many of which apply in general as well. The nice thing is that there is an example for each case.

Another fun thing is superoptimizers, and the fact that perfect superoptimization is actually an NP-hard problem. That is of course not a surprise. The interesting thing is that most if not all fun games are also NP-hard: as soon as a game is found not to be NP-hard it is considered 'solved', and it is not really fun to play when one knows the solution.

This might explain why so many of us are attracted to optimization: it is actually NP-hard (fun!). The same goes for AI and other game-related technologies.

Basically all we are trying to do is to surf on the edge of the current technology and try to push the most out of it by using algorithms that were not possible before but are almost possible now if optimized.

Wednesday, October 29, 2008

GI - Photon Mapping - Post 7 - 1 Million Rays

I am in the process of adding ray-packet tracing to my ray tracer, which from now on shall be known by the silly name 'Photonizer' (as a reminder that the ultimate goal is a photon mapper).

I decided for the sake of 'going ahead faster' to support only triangles as primitives from now on (goodbye my ellipsoid test scene... sniff)

I changed the triangle intersector from Möller-Trumbore to Wald's optimized projection.

I did this because I found it would be nicer to SIMDify (no cross products). A nice side effect, however, was that I also got a 25% speed-up with the new intersection code.

After that I wrote a parallel version of the intersector that can take a 2x2 ray packet. This took a full day between researching, coding and going through the Visual Studio SSE/SSE2 intrinsics documentation. Some time was also wasted writing cpuid code for CPU feature detection.

I have not yet parallelized the BVH traversal, but I was too eager to try it out, even with only primary rays and without shading, and I was extremely pleased with the result.

I got a 4.5x speedup rendering the Cornell box brute force without any space partitioning, and for the first time I saw a 1M rays/sec stat on my console (copy-paste: 1040062.855307 rays/sec.).

So although there is still work to do, this is the 1M for the record post.

Monday, October 27, 2008

GI - Photon Mapping - Post 6

Saturday, October 25, 2008

GI - Photon Mapping - Post 5

Basic Features

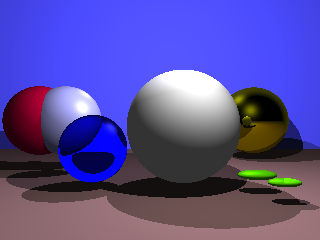

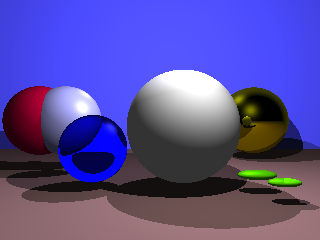

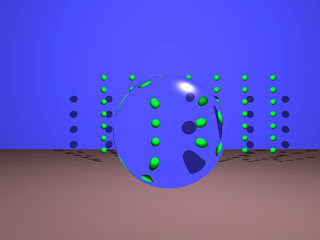

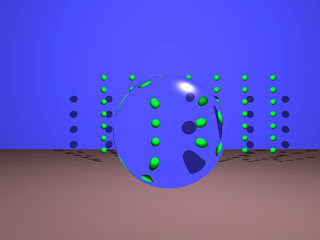

Here is a list of renders representing the still very basic state of the ray tracer.

You can see here flat planes, spheres, quadrics and triangles, point lights, flat shading, Lambertian diffuse, Blinn-Phong specular, hard shadows, reflections, refractions, exposure, super sampling and Wavefront .obj loading (using the code by 'Micah Taylor' a.k.a. kixornet found here), and finally multi-core rendering in 2 flavors (Task Thread Scheduler, OpenMP).

Two of the renders show artifacts (also known as surface acne).

Self Shadowing Artifacts

The first artifacts were caused by 'self shadowing': numerical imprecision causes the calculated intersection point to lie inside the surface it intersects, so shooting a new ray from the intersection point to determine light visibility causes an intersection with the surface itself. A common problem... There is more than 1 solution to the problem, but I chose to avoid 'epsilons' whenever possible.

I solved this without epsilons in a way that works for primitives that do not self shadow, which is for now the case for all the supported primitives.

When a ray-primitive intersect function is called on primitive (A), a flag is passed to indicate whether the ray's origin was also obtained by intersection with (A). For flat surfaces like planes and triangles I directly conclude that no new intersection is possible.

For spheres and general quadrics I also use the flag. If the flag is false (the origin comes from an intersection with another primitive) I use the normal intersection test (finding the smallest non-negative root). However, if the flag is true, I use a 'smarter' and a tiny bit more expensive algorithm. Based on our knowledge that the ray's origin is on the surface, we have 3 cases based on the 2 possible roots (tMin and tMax):

1. We obtain 2 positive roots: this can only mean the imprecision pushed the intersection point just outside the primitive, with the ray's direction pointing into the primitive (most probably a refraction ray), therefore we return tMax.

2. We obtain 2 negative roots: this can only mean the imprecision pushed the intersection point just outside the primitive, with the ray's direction pointing away from the primitive (most probably a light or reflection ray), therefore there is no intersection.

3. We obtain roots with opposite signs: the point was pushed inside the primitive. In this case we signal an intersection only if the positive root is larger than the absolute value of the negative root.

This solves the problem for general quadrics (including spheres) without using an epsilon or some iterative technique. Of course this might not be feasible for other types of surfaces; if I add such surfaces later I will add other techniques, and the technique will be chosen based on the primitive. Or at least ... that's the current plan...
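
The three root cases can be sketched as a small selection function. This is only an illustration in Python, shown for a sphere: the names `sphere_roots` and `pick_hit` and the `origin_on_surface` flag are assumptions for the example, and exactly zero roots are folded into the third case.

```python
import math

def sphere_roots(origin, direction, center, radius):
    """Both roots (t_min, t_max) of the ray-sphere quadratic, or None if there are none."""
    oc = [o - c for o, c in zip(origin, center)]
    a = sum(d * d for d in direction)
    b = 2.0 * sum(d * x for d, x in zip(direction, oc))
    c = sum(x * x for x in oc) - radius * radius
    disc = b * b - 4.0 * a * c
    if disc < 0.0:
        return None
    root = math.sqrt(disc)
    return (-b - root) / (2.0 * a), (-b + root) / (2.0 * a)

def pick_hit(roots, origin_on_surface):
    """Choose the ray parameter to report, or None for 'no intersection'."""
    if roots is None:
        return None
    t_min, t_max = roots
    if not origin_on_surface:
        # Normal test: smallest non-negative root.
        if t_min >= 0.0:
            return t_min
        return t_max if t_max >= 0.0 else None
    if t_min > 0.0 and t_max > 0.0:
        return t_max   # case 1: t_min is the spurious self hit, t_max the real one
    if t_min < 0.0 and t_max < 0.0:
        return None    # case 2: the surface lies entirely behind the ray
    # Case 3: opposite signs. Report the positive root only if it is the far one.
    return t_max if t_max > abs(t_min) else None
```

No tolerance constant appears anywhere: the decision relies only on the signs and relative magnitudes of the two roots.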

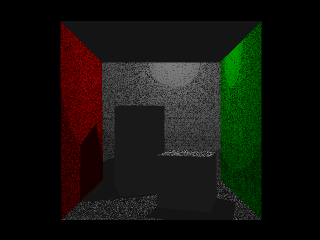

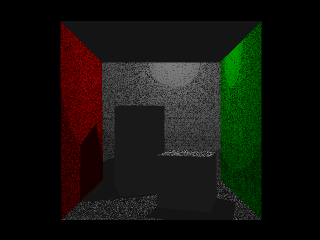

Big Float / Small Float Loss of precision Artifacts

The second kind of artifact is seen in the Test Cornell Box render ('Test' because it uses one point light). Point lights are currently represented by spheres. This scene is 540 units in height, while the light sphere was created with a radius of 0.1 units. When shooting a ray from a surface point toward the light, the sphere was sometimes missed: normalizing the (point to light) distance vector caused enough loss of precision for the intersection test with the sphere to report no intersection! My first idea was not to normalize the direction vector. This eliminated the artifact but produced others: now intersections were being detected between the ray and nearby triangles. There were only very few of these artifacts, but still, they were there. So I went back to normalizing the direction vector and simply increased the radius of the light sphere. This is probably not the best solution; I will look into this some more ...

Thursday, October 23, 2008

Tuesday, October 14, 2008

Domain-independent nonlinear planning has been shown to be intractable (NP-hard).

One more reason to think that general high level AI middleware is far away I guess...

source:

http://www.cc.gatech.edu/faculty/ashwin/papers/er-08-09.pdf

Don't know what NP-hard is? http://compgeom.cs.uiuc.edu/~jeffe/teaching/algorithms/notes/21-nphard.pdf

Thursday, October 9, 2008

Wednesday, October 8, 2008

GI - Photon Mapping - Update 4 (OpenMP)

I decided to make the code support 4 ways of handling multi-core systems:

- No Multi-Threading

- Do-It-Yourself (DIY) with all its disadvantages (using a multi-threaded task manager) which is what I already have

- OpenMP

- Intel TBB

OpenMP seems pretty well suited for the task, and for now I simply parallelized the render loop, allowing each horizontal line to be processed by a different thread.

I played around tuning it to my machine and test scenes. Logically, I got the best results by splitting the scene in 2: I have 2 processors and the load is pretty balanced in the scenes.

This of course does not scale well. It would be nice to make the code scale better with the number of processors and with the nature of the scene (load balance).

Simply making the tasks finer grained (for example splitting the scene into more chunks, or even pixel by pixel) comes at the cost of more scheduling, which is not negligible at all. Additionally, OpenMP scheduling clauses are normally fixed at compile time (unless schedule(runtime) is used, which defers the choice to the OMP_SCHEDULE environment variable). Yes, 'free lunch is over'....

I thought this would be better in OpenMP than in the DIY solution, but for now I have the feeling both have to be fine-tuned to a specific type of machine and processor count.

OpenMP is 'standard' and takes care of all the argument marshalling headaches. It also surely beats any experimental DIY in terms of robustness and stability, so unless really necessary it is of course the better choice. 'Really necessary' depends on the specific needs: OpenMP can be too simple and too limiting, making it unusable for many cases, but when it fits, it fits!

That said, I will still be trying to improve the OpenMP solution a bit before coding a basic TBB version.

Tuesday, October 7, 2008

The McCarthy Show (Building Great Teams)

http://www.mccarthyshow.com/

If this URL says nothing to you, IMMEDIATELY head there and start reading, starting with 'the Core'.

Examples of application are also at http://aigamedev.com/team-dynamics/core-protocols

This should have been a course in my university!

One could quote the whole thing, but I just selected this one to make you interested: "Asking in time of trouble means you waited too long to ask for help. Ask for help when you are doing well."

Monday, October 6, 2008

GI - Photon Mapping - Day 3 (Multi-Threading)

Today I added multi-threading to the Ray tracing renderer.

On my dual-core machine at work, rendering with multi-threading enabled takes a bit more than half the time needed with multi-threading off, which is of course excellent.

The absolute times are still irrelevant since the rendering itself is not yet optimized (no spatial subdivision).

I added multi-threading by allowing the renderer to split the render area into a configurable number of regions, each region creating a RenderJob which is then sent to a TaskManager as a task.

The TaskManager, in turn, has a configurable number of threads waiting to execute any virtual tasks they get. It is all pretty robust and thread-safe (where it needs to be).

Of course, since we are only rendering, the whole scene is 'read only' during the job, so there is not much to be done in terms of synchronization. Ray tracing is pretty parallel out of the box.
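
The region splitting described above can be sketched like this. It is a structural illustration in Python only: `split_rows`, `render_region` and `render` are hypothetical names, the standard library's `ThreadPoolExecutor` stands in for the TaskManager, and because of Python's global interpreter lock this version shows the job structure rather than a real speedup.

```python
from concurrent.futures import ThreadPoolExecutor

def split_rows(height, regions):
    """Split rows [0, height) into `regions` contiguous ranges of near-equal size."""
    base, extra = divmod(height, regions)
    ranges, start = [], 0
    for i in range(regions):
        size = base + (1 if i < extra else 0)
        ranges.append((start, start + size))
        start += size
    return ranges

def render_region(shade, width, rows):
    """One 'render job': shade every pixel of the given row range."""
    first, last = rows
    return [[shade(x, y) for x in range(width)] for y in range(first, last)]

def render(shade, width, height, regions, threads):
    """Send one job per region to a pool of worker threads and stitch the rows together."""
    with ThreadPoolExecutor(max_workers=threads) as pool:
        parts = pool.map(lambda rows: render_region(shade, width, rows),
                         split_rows(height, regions))
        return [row for part in parts for row in part]
```

The scene is only read inside `shade`, so the jobs need no locking; the only shared step is collecting the finished regions in order.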

Sunday, October 5, 2008

GI - Photon Mapping - Day 2 (More Ray-Tracer Features)

I had some time to work on the ray tracer a bit more: I got specular, reflections, refractions and exposure working. I also added a simple camera control which enters a 'preview mode' that renders at slow but still interactive rates. This way I can move the camera, and when I stop I get the scene rendered in normal (non-preview) mode.

One thing I noticed is how easy it is to debug artifacts: just find the coordinates of the problem pixel and follow the ray...

One thing I am currently working on is removing any magical tolerances. I have seen such tolerances used in tutorials for cases such as this: a ray collides with a primitive, and you generate a new ray with the collision point as origin but a new direction; you do not want the new ray to be detected as colliding with the source primitive. It is possible to solve this by using a small tolerance to move the collision point to the outside of the surface. I do not like that, because those tolerances are usually scene dependent: they depend on the lack of floating point precision AND on the metrics and nature of the scene. That is why I like to avoid such work-arounds whenever possible, even at the cost of some performance.

I changed the collision routines for the spheres to handle the cases cleanly without using any tolerances.

Next I will fix the plane intersection routines (for now all I have is spheres and planes) to handle the case as nicely.

After that, I will probably take a small break from adding 'features' and play around with some multi-core support for the ray tracer. Even though I have not added spatial subdivision yet, one important goal of this project is playing around with multi-core, so the sooner that happens the better, even if the 'single-core' version is not optimized yet.

A screenie for the record.

Tuesday, September 30, 2008

GI - Photon Mapping - Day 1 (Diffuse Scene)

Since it seems I will be having a bit of free time soon, I decided to have a go at a 'Multi-core Global illumination using photon mapping' project. The goal is to write a photon mapper and then have some fun trying to make it use multiple cores on a PC (or Xbox 360 if XNA allows?).

I decided to start off with a ray tracer to use it as a base and go from there.

I used the WitchEngine (the engine I wrote for World of football) as a base.

It has more or less everything needed for math, camera and scene management, and I will use its KD-tree for acceleration as well, later, after I add ray shooting to it.

I started off by rendering to images using the FreeImage library, and in the process also got a tip to use the PixelToaster library from my friend/colleague Volker Arweiler, who has been playing around with ray tracing as well.

After half a day's work, I have super sampling, diffuse (Lambertian) and hard shadows implemented.

So I am very sorry to have to show you yet another super basic ray tracing screenshot.

Wednesday, September 3, 2008

Good software design nicely summarized in 4 simple (but deep) points

- Create crisp and resilient abstractions.

- Maintain a good separation of concerns.

- Create a balanced distribution of responsibilities.

- Focus on simplicity.

Friday, August 15, 2008

Case Study: Optimizing a Search Algorithm (for AI assisted Footballer switching in WOF)

Motivation

In WOF, we usually seek perfection. In the case of AI-assisted footballer control switching, we wanted to do better than other comparable games. This means that when a human player is controlling a footballer and the ball is shot or passed, control has to switch to the best candidate. This does not mean the first footballer in the ball's direction! Even a little thought gives a long list of cases where that does not work: lobs, balls unreachable because of ball speed and footballer reaction time, the target footballer being busy with other important actions... (even ball deflections from the goal bar are handled 'correctly' by WOF!)

The AI also had to correctly handle multiple human players in the same team and not allow ping-pong switching between them.

Results

A shy video showing the results (partially) can be seen here:

Non Linearity and Searching

Since we are in 3 dimensions, the speed of the ball is non-linear and its position even more so, so the fact that the footballers' speeds can be approximated as linear is not of great help.

Therefore, the correct algorithm had to rely on a search. In its basic unoptimized form, it calculates the ball's complete trajectory (colliding with static obstacles only) and, for each potential footballer, finds the portions of the trajectory (and yes, they are portions, because the ball's height changes) where the ball can be intercepted. It then chooses among those based on a mix of criteria: first to intercept, wait time after arriving at the position, effort needed to arrive at the position...

As with every search algorithm, it makes a lot of sense to optimize it; we do not want this single 'basic' task to wreak havoc on the whole game's performance.

Full Ball Trajectory Calculation

The full ball trajectory is very useful and we use it for many different purposes, which is why we already had it implemented. It is calculated using exactly the same physics engine used in the game and is therefore very exact. Collisions are only performed with static objects; it does not make sense to include dynamic ones since they are ... dynamic.

Because of our performant physics, collision detection and spatial subdivision, we can afford to calculate the full ball trajectory every time the ball is shot.

The calculation is optimized in 2 ways. The first optimization is splitting the calculation across a configurable number of frames: since we never need the whole trajectory instantaneously, in practice we obtain it fast enough for all of our purposes. The second optimization is an intelligent choice of sampling technique. Instead of naive sampling, we use an error metric that gives us the fewest samples possible while keeping their quality such that one can linearly interpolate the position of the ball, based on a sample's start velocity, until the next sample, with the error staying below the ball's radius.
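
One way such an error-driven sampling could look is sketched below in Python. It is not WOF's implementation: the ball is simulated under gravity only (no collisions, no drag), the step size and the function names `simulate`, `predict` and `adaptive_samples` are assumptions, and a new sample is stored whenever the straight-line prediction from the last sample drifts by the allowed error or more.

```python
import math

GRAVITY = (0.0, -9.81, 0.0)  # assumed: metres and seconds, y is up

def simulate(position, velocity, dt, steps):
    """Yield (time, position, velocity) states of a ball under gravity only."""
    time = 0.0
    for _ in range(steps):
        yield time, position, velocity
        velocity = tuple(v + g * dt for v, g in zip(velocity, GRAVITY))
        position = tuple(p + v * dt for p, v in zip(position, velocity))
        time += dt

def predict(sample, time):
    """Straight-line position estimate from a sample's start position and velocity."""
    t0, p0, v0 = sample
    return tuple(p + v * (time - t0) for p, v in zip(p0, v0))

def adaptive_samples(states, max_error):
    """Keep a state as a sample only when the last sample no longer predicts it well."""
    samples = []
    for state in states:
        time, position, _ = state
        if not samples or math.dist(predict(samples[-1], time), position) >= max_error:
            samples.append(state)
    return samples
```

Every simulated state is then either a stored sample itself or lies within `max_error` of the prediction made from the sample before it, so choosing `max_error` equal to the ball's radius gives the bound described above with far fewer samples than simulation steps.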

Search Optimizations

As with all searches, we can do much better than brute force. And since this search is executed continuously as the footballers move, it had better be fast!

One optimization was made by adding functionality to the trajectory calculation phase, allowing it to split the trajectory on the fly into multiple sections depending on the height of the ball (and therefore its reachability by footballers), so that 'too high' sections can be skipped completely during the search.
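
A small Python sketch of the section idea, assuming samples are plain `(time, height)` pairs and that a single hypothetical `max_reach` height decides reachability (the real criterion in WOF is not described here):

```python
from itertools import groupby

def split_by_height(samples, max_reach):
    """Group consecutive (time, height) samples into (reachable, samples) sections."""
    return [(reachable, list(section))
            for reachable, section in groupby(samples, key=lambda s: s[1] <= max_reach)]

def reachable_sections(samples, max_reach):
    """Only the sections a footballer could reach; 'too high' ones are dropped."""
    return [section for reachable, section in split_by_height(samples, max_reach)
            if reachable]
```

The search then iterates over `reachable_sections(...)` only, so a long lob contributes just its start and end to the work.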

As for the search itself, we used temporal caching, and a number of WOF specific optimizations.

For temporal caching, we hold on to the last found intercept point and only check its validity, using its type (footballer will wait for ball at intercept, ball will wait for footballer at intercept, no intercept, ...) and the amount of time elapsed since the intercept was first analyzed. For example, if we found a valid intercept point where the footballer has to wait 0.5 seconds for the ball after arriving at his position, we can, until those 0.5 seconds have passed, 'simply' recheck the intercept's validity (while keeping an eye on where the footballer is going), which is much cheaper than starting a new search.

Of course, samples are invalidated as time passes, making the number of samples to search smaller the further time advances, but this is obvious.
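
The caching rule for the 'footballer waits for ball' case could be sketched as follows. This is a simplified Python illustration, not the WOF code: `Intercept`, `InterceptCache` and the two callbacks `full_search` and `still_valid` are hypothetical, and only that one intercept type is treated as cacheable.

```python
from dataclasses import dataclass
from typing import Callable, Optional

@dataclass
class Intercept:
    kind: str         # e.g. "footballer_waits", "ball_waits" or "none"
    found_at: float   # game time at which the intercept was first analyzed
    wait_time: float  # seconds the footballer waits for the ball at the point

class InterceptCache:
    def __init__(self, full_search: Callable[[float], Intercept],
                 still_valid: Callable[[Intercept, float], bool]):
        self.full_search = full_search   # expensive search over the trajectory
        self.still_valid = still_valid   # cheap recheck of a known intercept
        self.cached: Optional[Intercept] = None
        self.searches = 0                # counts how often the expensive path ran

    def query(self, now: float) -> Intercept:
        cached = self.cached
        if (cached is not None
                and cached.kind == "footballer_waits"
                and now - cached.found_at < cached.wait_time
                and self.still_valid(cached, now)):
            return cached                # reuse: only the cheap recheck was needed
        self.cached = self.full_search(now)
        self.searches += 1
        return self.cached
```

With a 0.5 second wait, queries at 0.0, 0.2 and 0.4 seconds trigger a single full search, and the next full search only happens once the wait has elapsed or the recheck fails.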

The implementation itself comes to (crudely estimated) 2500 lines of source code (excluding headers), split among multiple files in a nicely organized design that allows reusing it cleanly for a number of other purposes (like the scripted AI using it to make its decisions).

Conclusion

When considering searches, make sure they are unavoidable. If you are sure they are, understand why, implement them, optimize, profile and enjoy the hopefully nice results.

in WOF, We usually seek perfection. In the case of AI assisted Footballer control switching, we wanted to do better than other comparable games. This means, when a human player is controlling a footballer, and the ball is shot or passed, control has to switch to the best candidate. This does not mean, the first footballer in the ball's direction! Even the smallest thought effort gives us a long list of cases where this does not work: lobs, balls unreachable because of ball speed and footballer reaction time, target footballer being busy doing other important actions... (even ball deflections from the goal bar are handled 'correctly' by WOF!)

the AI also had to correctly handle multiple human players in the same team and not allow ping- pong switching between them.

Results

a shy video showing the results (partially) can be seen here:

Non Linearity and Searching

Since we are in 3 dimensions, the speed of the ball being non linear, and the position even much less so, the fact that the footballers speeds can be approximated as linear is not of great help.

Therefore, the correct algorithm had to rely on a search. in it's basic unoptimized form, it should calculate the ball's complete trajectory (colliding with static obstacles only), and for each potential footballer, find the portions of the trajectory (and yes they are portions because of ball height changing) when the ball can be intercepted. Then choose among those based on mix of criteria: first to intercept, wait time after arriving to position, effort needed to arrive to position...

As with every search algorithm, it makes lots of sense trying to optimize it, we do not want this single 'basic' task to wreak havoc on the whole game's performance.

Full Ball Trajectory Calculation

The full ball trajectory is very useful, and we use it for many different purposes, that is why, we already had it implemented. It is calculated using exactly the same physics engine used in game, it is therefore very exact. The collisions are only performed with static objects, it does not make sense to include dynamic ones since they are ... dynamic.

Because of our performant physics, collision detection and spatial subdivision, we can afford to calculate the full ball trajectory every time the ball is shot.

The calculation is optimized in two ways. The first optimization is splitting the calculation among a configurable number of frames; since we never need the whole trajectory instantaneously, in practice we obtain it fast enough for all of our purposes. The second optimization is the intelligent choice of sampling technique. Instead of naive sampling, we use an error metric that gives us the fewest samples possible while keeping their quality such that one can linearly interpolate the position of the ball from a sample's start velocity until the next sample, keeping the error below the ball's radius.
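The sampling idea can be sketched roughly like this in Python. The `simulate` function is only a stand-in for the real physics engine (gravity only, no drag, spin or collisions), and the greedy rule in `adaptive_samples` is my guess at one possible error metric, not necessarily the one used in WOF.

```python
import math

GRAVITY = (0.0, -9.81, 0.0)  # y is up; assumed units are metres and seconds

def simulate(pos, vel, dt, steps):
    """Stand-in for the real physics engine: gravity only, fixed time step."""
    t = 0.0
    for _ in range(steps):
        yield (t, pos, vel)
        vel = tuple(v + g * dt for v, g in zip(vel, GRAVITY))
        pos = tuple(p + v * dt for p, v in zip(pos, vel))
        t += dt

def extrapolation_error(sample, state):
    """Distance between the state's position and a straight-line guess from sample."""
    t0, p0, v0 = sample
    t, p, _ = state
    guess = tuple(a + b * (t - t0) for a, b in zip(p0, v0))
    return math.dist(guess, p)

def adaptive_samples(states, max_error):
    """Greedily keep a state only when the straight-line guess would drift too far.

    The error bound holds as long as a single physics step drifts by
    less than max_error, which is assumed here.
    """
    kept = []
    previous = None
    for state in states:
        if not kept:
            kept.append(state)
        elif extrapolation_error(kept[-1], state) >= max_error and previous is not kept[-1]:
            kept.append(previous)  # last state that was still within tolerance
        previous = state
    if previous is not None and previous is not kept[-1]:
        kept.append(previous)  # always keep the end of the trajectory
    return kept
```

With `max_error` set to the ball's radius, a two second flight simulated at 100 steps per second should collapse to a small fraction of its states, and each of the remaining samples can be extended in a straight line until the next one.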

Search Optimizations

As with all searches, we can do much better than brute force. And since this search is executed continuously because the footballers move, it had better be performant!

One optimization was made by adding functionality to the trajectory calculation phase, allowing it to split the trajectory on the fly into multiple sections depending on the height of the ball (and therefore its reachability by footballers), so that 'too high' sections can be completely skipped during the search.
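A small Python sketch of the section idea follows. Unlike the real implementation, which splits while the trajectory is being calculated, this version splits an already finished list of samples; the `(time, height)` sample layout and the `max_reach_height` parameter are assumptions for illustration.

```python
from itertools import groupby

def split_by_reachability(samples, max_reach_height):
    """Group consecutive (time, height) samples into (reachable, samples) sections."""
    return [
        (reachable, list(section))
        for reachable, section in groupby(samples, key=lambda s: s[1] <= max_reach_height)
    ]

def searchable_samples(sections):
    """Yield only reachable samples, skipping 'too high' sections as a whole."""
    for reachable, section in sections:
        if reachable:
            yield from section
```

For a lob, this typically leaves a short section right after the kick and a longer one near the landing point, with everything in between skipped by a single check per section.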

As for the search itself, we used temporal caching, and a number of WOF specific optimizations.

For temporal caching, we hold on to the last found intercept point and only check its validity using its type (footballer will wait for ball at intercept, ball will wait for footballer at intercept, no intercept, ...) and the amount of time elapsed since the intercept was first analysed. For example, if we found a valid intercept point where the footballer has to wait 0.5 seconds for the ball after arriving at his position, we can, until 0.5 seconds have passed, 'simply' recheck the intercept's validity (while keeping an eye on where the footballer is going), and this is much cheaper than starting a new search.
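In Python, the temporal caching could look something like the sketch below. The class and field names are invented, `full_search` and `still_valid` are hypothetical callbacks standing in for the expensive search and the cheap recheck, and only the 'footballer waits for ball' case is reused here to keep things short.

```python
from dataclasses import dataclass
from enum import Enum, auto

class InterceptType(Enum):
    FOOTBALLER_WAITS = auto()  # footballer arrives first and waits for the ball
    BALL_WAITS = auto()        # ball arrives first
    NONE = auto()              # no intercept possible

@dataclass
class CachedIntercept:
    kind: InterceptType
    found_at: float  # game time of the first analysis
    wait: float      # seconds the footballer waits for the ball

class InterceptCache:
    def __init__(self, full_search, still_valid):
        self.full_search = full_search  # expensive: now -> CachedIntercept
        self.still_valid = still_valid  # cheap: (intercept, now) -> bool
        self.cached = None
        self.searches = 0               # counts how often the full search ran

    def get(self, now):
        """Reuse the cached intercept while its wait window is open and it rechecks fine."""
        c = self.cached
        if (c is not None
                and c.kind is InterceptType.FOOTBALLER_WAITS
                and now - c.found_at < c.wait
                and self.still_valid(c, now)):
            return c
        self.searches += 1
        self.cached = self.full_search(now)
        return self.cached
```

With a 0.5 second wait window and a game running at 60 updates per second, about 30 consecutive calls to `get` can be served by the cheap recheck before a new search is started.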

Of course, samples are invalidated as time passes, which makes the number of samples to search smaller the further time advances, but this is obvious.

The implementation itself amounts to (crudely estimated) 2500 lines of source code (excluding headers), split among multiple files in a nicely organized design that allows it to be reused cleanly for a number of other purposes (like the scripted AI using it to make its decisions).

Conclusion

When considering searches, make sure they are unavoidable. If you are sure they are, understand why, implement them, optimize, profile and enjoy the hopefully nice results.

Wednesday, August 13, 2008

Smoothing Character Collision Response using Quadratic Equations

Motivation

In WOF, we wanted characters to smoothly collide with the world (goal, advertisement banners, other characters, ...) and therefore 'slide' next to obstacles and not get blocked by them.

This might not be a necessity per se, since in a football game the footballers have no business being outside the pitch, but it would be nice to have for reuse in other games. It was also something I had not done before, so I wanted to get my hands dirty with a more general solution than just character / character collision, where bounding volumes could be used.

I decided to use the real polygon geometry of the obstacles and not any specially prepared collision proxies. This meant the algorithm needed to handle colliding simultaneously with a number of objects / triangles while still making the best of it and sliding in the correct direction whenever that would be the right thing to do.

Results Video

A video of the nice results can be seen here:

http://www.youtube.com/watch?v=zBxg_Mp3md0

Usual Approach(es)

So, as a first step, of course: research. This was some time ago, so I will not post any links (you can search for yourselves), but mostly people used nicely formed collision volumes such as spheres, capsules and cylinders. One advantage is, for example, being able to rotate in place without the collision volume changing, and therefore not causing collisions because of rotations.

This is important because, if a character is moving, as in changing its position, we know in which direction it moved, and we can therefore use that direction to make the collision response look credible. For turning, however, things are a bit more complicated: your usual collision tests help by telling you how to translate volumes to resolve the collision, but not how to rotate them, unless we search using bisection, which is expensive, and it seems there is no way around it for now.

This is again related to continuous collision detection (CCD), an active research topic. Again, linear is much easier than angular; this of course makes sense and has an explanation, but it is out of our scope right now, and as usual, the net is there for those who want to know more. A first search points to "Continuous Collision Detection Progress and Challenges" by Gino van den Bergen, who is known for his research in this area. The Bullet physics forums are probably also a good place for this topic, among many others.

Chosen Solution

Now, our math library already had collision tests for many primitives, almost all a game would ever need, except capsules. I did not like the idea of using spheres because they would be too big, and we wanted the characters to be able to come very close before colliding. Of course, the spheres could be made smaller, but that has other disadvantages; basically, the sphere did not fit the real volume closely enough for my taste (there are many collision detection tutorials / papers that discuss the fitting properties of collision shapes). At the time, I chose to use axis-aligned bounding boxes (AABBs) that do not change their volume when the footballer changes direction. Additionally, OBBs were being used for accelerating collision detection between the ball and the footballer's limbs using the physics engine, which means we have two types of bounding volumes for two different purposes.

Capsules would have been an equally good choice, or even a better one. But the 'technique' I used to resolve collision applies to both.

Plan of Attack

So what do we really want to achieve? We want the character to slide along obstacles and not be blocked by them when it makes sense.

When does it make sense? It makes sense when the obstacle does not directly oppose the direction in which the character is moving. So unless the obstacle directly opposes the movement direction, there is a possibility to slide; this is the basic idea. Of course, we have more than one obstacle, and since we want to use the raw polygons of the obstacles, we will have more than one triangle per obstacle. Additionally, in WOF, obstacles can be volumes, so in the end we will have a number of triangles and volumes to check against, and we will need to find a sliding direction given the original movement direction and the triangles and volumes.

There is more than one way to solve this, but I will describe the final solution (and I think in WOF's context the best solution) that I came up with.

'Algorithm'

For all triangles and volumes, I used swept volume collision detection to gather all the contact normals between the moving volume and the world's objects. For triangles I used only the face normals; this prevents the character from getting stuck because of slight penetrations of polygonal objects. Cases where we really have a collision with a triangle edge work as well: assuming all triangles in an object are connected, whenever we have contact with an edge we also have contact with the two or more triangle faces that meet at that edge. So we can get away with using triangle face normals and no edges (or points). This way we happily slide along polygonal objects even if we hit edges because of slight penetrations, and we will still be correct when we hit real edges, because in that case more than one face normal will be taken into account. (Some pictures at this point would be nice, but maybe I will do them later and update this post.)

So, as I said, I gather all contact normals as explained above, then I use quadratic programming to find a direction that satisfies the following constraint: it has a non-zero negative dot product with all the gathered normals, or in other words, it is a direction along which we can move freely without penetrating any of the currently contacting obstacles. I take the resulting direction and, if it is zero or if it has the same direction as the movement vector (solvedDir dot moveDir > 0), I block the moving object, sending it back to where it was at the last step. I use a fixed step approach to physics, which makes this always look OK.

Otherwise, I use the direction obtained from the solution as the new movement direction, optionally projecting the current movement translation onto it (thus reducing the movement speed). The new direction is not used 'after' the current movement is applied: the contacts are gathered during a 'check potential contacts using the current direction' pass, so the new direction (translation) is used instead of the original direction.

Luckily, we have a convex quadratic programming problem at hand, which means it can be solved in reasonable time. I used the QuadProg++ library (which uses the Goldfarb-Idnani dual method), but I modified it to optimize its memory allocation behaviour.
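For readers who want to experiment without a QP library, here is a small Python sketch of the same kind of problem. It is not the WOF code and it does not use QuadProg++ or the Goldfarb-Idnani method. It assumes one particular formulation: find the vector d closest to the intended move m (minimise |d - m|^2) such that n . d >= 0 for every contact normal n, with normals pointing out of the obstacles towards the character. This sign convention and the objective are assumptions of the sketch and may differ from the ones used in WOF. With only a handful of contacts in 3D, the exact solution can be found by trying every set of one or two 'active' normals.

```python
import math
from itertools import combinations

def dot(a, b):
    return sum(x * y for x, y in zip(a, b))

def sub(a, b):
    return tuple(x - y for x, y in zip(a, b))

def scale(a, s):
    return tuple(x * s for x in a)

def cross(a, b):
    return (a[1] * b[2] - a[2] * b[1],
            a[2] * b[0] - a[0] * b[2],
            a[0] * b[1] - a[1] * b[0])

def normalized(a):
    # Assumes a is not the zero vector.
    return scale(a, 1.0 / math.sqrt(dot(a, a)))

def slide_direction(move, normals, eps=1e-9):
    """Return the vector closest to move with n . d >= 0 for every normal n."""
    normals = [normalized(n) for n in normals]
    # Candidate solutions: the free move, a full stop, the move projected
    # onto each contact plane, and the move projected onto each edge line
    # formed by two contact planes.
    candidates = [move, (0.0, 0.0, 0.0)]
    for n in normals:
        candidates.append(sub(move, scale(n, dot(move, n))))
    for a, b in combinations(normals, 2):
        axis = cross(a, b)
        if dot(axis, axis) > eps:  # skip (nearly) parallel normals
            axis = normalized(axis)
            candidates.append(scale(axis, dot(move, axis)))
    feasible = [d for d in candidates if all(dot(n, d) >= -eps for n in normals)]
    return min(feasible, key=lambda d: dot(sub(d, move), sub(d, move)))

def is_blocked(direction, eps=1e-9):
    """A (near) zero result means there is nowhere to slide."""
    return dot(direction, direction) < eps
```

Moving diagonally into a wall with normal (0, 0, 1) using the move (1, 0, -1) gives (1, 0, 0), a slide along the wall, while running straight into a corner gives the zero vector and the character is blocked. The enumeration grows quadratically with the number of contacts, which is one reason a real QP solver is the better tool once many triangles are touched at the same time.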

There are some more details to take into consideration. In WOF, for example, in some modes (tackling, for example) we allow footballers to penetrate each other, so we need filters to specify which triangles / objects to use for collision detection. There are also issues about how to make swept collision detection behave nicely in all problematic cases. All those issues are solved and work well in WOF, as one can see in the video, but the point here was using quadratic programming to solve for the 'sliding direction'. As for performance, there have been no problems so far; I will spare you the profiling numbers though, since they are only relevant for WOF.

Tuesday, August 12, 2008

It's all so easy... (Brain stack dump)

For some time now, I have had the feeling that all problems seem to carry no difficulty for me.

So I took some time to think about it, to find out whether it is an illusion, a reality, or pure naive stupidity.

What I will begin with is not the first idea link in the chain of thoughts that I went through while thinking about this, but I still found it would be good to start with.

Of course, as with all topics, it is important to first define the used terms.

One dictionary definition of 'difficult' is: "not easy to do, understand, or solve". This definition uses the word 'easy', so it does not really help much, since I am searching for a deeper definition of 'difficult'.

Looking up the definition of 'easy', I get: "not difficult; simple", which is of course also not helpful, since it uses the word 'difficult'. Instead of looking up 'simple' (probably to find it defined using 'difficult' or 'easy'), I stop here and decide that the problem is really worth investigating!

So let us finally start: do you think the question 'what is the result of 1 + 1' is a difficult question for a 2 year old? yes? I think no...

I think there is a clear method to tackle any problem, and that there is no such thing as a difficult problem. The method is: identify the problem, search for a known method to solve the problem, and if none is found, search for a way to solve the problem.

Pffff you say, we all know that and it does not make any problems less difficult. Sure it does.

Going back to the '1 + 1' question, let us apply our method. First, "identify the problem": OK, the problem consists of the parts '1' and '+', and the answer we are thinking of is '2'. Can we explain to a 1 year old what '1' is? No. So the problem is not difficult at all: we cannot even 'feed' the problem into the 1 year old, whose brain does not yet have any way of internally representing the first symbol '1'. From the point of view of the 1 year old, the problem is non-existent, but not 'difficult'.

Now what about asking the same question to a 6 year old who is good at math? Easy, you say? I disagree as well. When we ask what '1 + 1' is, we get the answer '2', yes, but if we ask why ... we are stuck. The 6 year old did not solve the problem; he just looked up the answer in his memory, or counted on his fingers, but I do not consider the problem solved. To really solve the problem, let us ask ourselves why 1 + 1 = 2. Stupid question? Not at all. 1 + 1 could be 10, like we see printed on popular nerd T-shirts; this would be the case if we are talking binary. 1 + 1 could also be 0; this would be the case if we are asking how many apples 1 orange + 1 banana make. So again, using our method, we first have to identify the problem. What does 1 + 1 actually mean? Before we know that, it is useless to start solving. So, painful but necessary: what is '1'? 1 could be anything, but abstracted it is a number. This is still not enough: what is a number? ... It is also a number in the decimal system, not binary, not hexadecimal. So 1 is one unit of 'anything', and, also very important, when we say 1+1 we do not mean 1 of anything + 1 of anything; no, we mean 1 of anything + 1 of the same anything. Of course we also have to define + (for which I do not have a clear definition without using a concrete 'something', like: 1+1 oranges is having 1 unit of orange in some container and then adding one more unit of orange to the same container). And all of this is useless if we do not define what 2, 3, 4, 5, 6, 7, 8, 9, 10 are (which I also do not find easy to explain in abstract terms without using oranges), along with a method to count in decimal. Only after all this can we say that we have identified the problem. Having identified the problem, and using the definition of how the decimal system works and what its symbols and their combinatorial representations (99, 1054) mean, we find that 1+1 is basically, by definition, 2.
So the problem is not 'difficult' in itself; there is a clear method for solving it.

Now what if we try to explain this whole definition to a 15 year old for one whole year, and that person still does not understand it? That is an interesting point to think about. It means that people are like computers: they have processors with capacity and speed. The processors are analogue, flexible, and can grow and change, but they are still processors. So I think that when a problem's definition is above the capacity of the brain that is supposed to 'solve' it, it is useless to talk about problem difficulty; the problem simply cannot even be 'fed' into the brain.

There is also the case where that 15 year old would understand the problem but needs 5 seconds to come up with an answer instead of 1 second. This would simply mean that the capacity to accept the definition is there, but the processing speed is slow.

It still doesn't mean the problem is 'difficult', not even relatively.

(Speaking about processors, here are some very cool mechanical ones)

Now, after identifying the problem, our second step says 'search for a known method to solve the problem'. This could mean using a method that we already know ourselves (saved in the brain), or doing research to find that people have already found solutions to our problem or to similar ones.

This would, for example, be the case when asking someone who has just started to learn '3D graphics' to 'write a skeletal animation library'. After identifying the problem, he would do some research and find that other people have done this countless times and written about it; he would then use his research to write the library. Does this mean the problem is 'difficult' for a 3D beginner? Again, no. We would be tempted to say that it is difficult for the beginner and easy for an experienced 3D graphics software engineer, but no, there is no 'difficulty' involved here. There is simply search time involved that the experienced engineer need not spend now, but did spend at some point in the past. Again, it is not 'difficult'.

Another example is asking 'write a very robust game physics engine'. Is this difficult? Again, I say no, for the same reasons. Defining what exactly is meant by a physics engine, and what makes it robust, could fill several papers. For someone who has never written or used a physics engine, a lot of time would be needed working on step 1. Does this mean that the step is difficult? No, it simply needs time. Additionally, if that someone has no math experience, more papers would be added, and there are many other topics that would also justify a good amount of papers before reaching the end of the first step. But we are still following a clear recursive method; there is no magic or difficulty involved, it just takes time depending on the brain's speed, assuming the capacity is there.

After that would come step 2, also consuming lots of time, and if robust means more robust than the best current engines, we would finally come to step 3. If there is no known solution to the 'very robust' problem for problematic cases like huge world dimensions, very fast movements and rotations, huge mass ratios, etc., then we would need to 'search' for solutions, and a search is really a search; it is like A* or similar in AI. There is no way to circumvent searches when the problems are new. It could also be that the problem is NP-hard (there are many places to read about the P versus NP problem; one I recommend is the book "Artificial Intelligence: A Modern Approach" by Stuart J. Russell and Peter Norvig). Simplified, NP-hard means that until now, nobody has managed to prove that we can do anything better than 'searching' to find a solution for such problems.

Searching would be done by using all known heuristics and theoretical information, and trying plausible solutions until one is found or not found; finding out there is no solution is also a solution. Some robustness problems are, for example, very easy to solve if we use much better data types, like huge 512-bit floating or fixed point numbers instead of our usual floats or doubles, but then we hit our current technological limits (the speed and memory of our current computers) and the real-time constraints a game physics engine must satisfy. All this still does not mean the problem is difficult. There is an obvious way to solve it; the solution might end up being a search that has no time bound, or a conclusion that the problem is not solvable given the constraints, but this does not mean 'difficult'. What is important, though, is finding out that sometimes a search is needed, and that is of course the case for all 'new' problems, which are usually generated by solutions to old problems or by required improvements. This is how the beautiful train of technology evolution rolls by, taking all of us enthusiasts on a nice ride.

Being the brain dump it is, this post has no conclusion. The topic still needs more thinking, and maybe after a few more related posts a structured conclusion will come out.

So I took some time to think about to find out if it's an illusion, a realtiy, or pure naive stupidity.

What I will begin with is not the first idea link in the chain of thoughts that I went through while thinking about this, but I still found it would be good to start with.

Of course, as with all topics, it is important to first define the used terms.

one dictionary definition of 'difficult' is: "not easy to do, understand, or solve". this definition uses the word easy, so this does not really help much, since I am searching for a deeper definition of difficult.

Looking up the definition for easy I get: "not difficult; simple", which is of course, also not helpful, since it uses the word 'difficult'. Instead of looking up simple (to probably find it using 'difficult' or 'easy'), I stop here and decide that the problem is really worth investigating!

So let us finally start: do you think the question 'what is the result of 1 + 1' is a difficult question for a 2 year old? yes? I think no...

I think there is a clear method to tackle any problem, and that there is no such thing as a difficult problem. The method is: identify the problem, search for a known method to solve the problem, if non is found, search for a way to solve the problem.

Pffff you say, we all know that and it does not make any problems less difficult. Sure it does.

Going back to the '1 + 1' question, let us apply our method. 1st "Identify the problem", ok the problem consits or the parts '1' '+' and the answer we are thinking of is '2'. Can we explain to a 1 year old what '1' is? no. so the problem is not difficult at all, we cannot even 'feed' the problem into the 1 year old, who's brain does not have yet any way of internally representing the 1st symbol '1', so the problem is non existent from the point of view of the 1 year old, but not 'difficult'.

Now what about asking the same question to a 6 year old who is good at math. easy u say? I disagree as well. when we ask what is '1 + 1' we get the answer '2' yes, but if we ask why ... we are stuck. the 6 year old did not solve the problem, he just looked up the answer in his memory, or counted on his fingers, but I do not consider the problem solved. To really solve the problem let us ask ourselves why does 1 + 1 = 2. Stupid question? not at all, 1 + 1 could be 10 like we see printed on popular nerd T-shirts, this would be the case if we are talking binary. 1 + 1 could also be 0, this would be if we are asking how many apples is 1 orange + 1 banana. So again, using our method, we 1st have to identify the problem. what does 1 + 1 actually mean, before we know that, it is useless to start solving. so, painful but necessary, what is '1' ? 1 could be anything, but abstracted it is a number, this is still not enough, what is a number? ... it is also a number using the decimal system, not binary, not hexadecimal. so 1 is one unit of 'anything', and also very important when we say 1+1 we do not many 1 of anything + 1 of anything, no, we mean 1 of anything + 1 of the same anything. of course we also have to define + (for which I do not have a clear definition without using a concrete 'something' like 1+1 oranges is having 1 unit of orange in some container and then adding on more unit of orange into the same container). And all of this is useless is we do not define what 2, 3, 4, 5, 6, 7, 8, 9, 10, (which I also do not find easy to explain in abstract terms without using oranges) and a method to count in decimal. Only after all this can we say that we have identified the problem. After having the problem identified, and by using the definition of how the decimal system works, and what it's symbols and their combinatorial representations mean (99, 1054), we find that 1+1 is basically by definition, 2. 
So the problem is not 'difficult' in itself, there is a clear method for solving it.

Now what if we try to explain this whole definition to a 15 year old, for 1 whole year, and that person still does not understand it? that's interesting point to think about. It means that people are like Computers, they have processors with capacity and speed, the processors are analogue but they are still processors, they are flexible and can grow and change, but they are still processors, and so I think that when a problem's definition is above the capacity of the brain that is supposed to 'solve' it, it is useless to talk about problem difficulty, the problem simply can't even be 'fed' into the brain.

There is also the case where that 15 year old would understand the problem, but needs 5 seconds to come with an answer, instead of 1 second, this would simply mean the capacity to accept the definition is there, but the processing speed is slow.

It still doesn't me the problem is 'difficult', not even relatively.

(Speaking about processors, here are some very cool mechanical ones)

Now after identifying the problem, our 2nd step says 'search for a known method to solve the problem', this could be using a method that we already know ourselves (saved in the brain), or doing research to find that people already found solutions to our problem or similar ones.

This would be per example the case when asking 'write a skeletal animation library' to someone who just started to learn '3D graphics', after identifying the problem, he would do some research and find that other people have done this countless times and written about it, he would then use his research to write the library. Does this mean the problem is 'difficult' for a 3D beginner ? again, no, we would be tempted to say that it is difficult for the beginner and easy for an experienced 3D Graphics Software engineer, but no, there is no 'difficulty' involved here, there is simply search time envolved that the experienced engineer need not do, but did at some point in the past, again it is not 'difficult'.

Another example is asking 'write a very robust game physics engine'. Is this difficult? Again I say no, for the same reasons. Defining exactly what is meant by a physics engine, and what makes it robust, could fill several papers. Someone who has never written or used a physics engine would need a lot of time for step 1. Does this mean that the step is difficult? No, it simply needs time. If that someone has no math experience, more papers would be added, and there are many other topics that would each justify a good number of papers before reaching the end of the 1st step. But we are still following a clear recursive method; there is no magic and no difficulty involved, it just takes time depending on the brain's speed, assuming the capacity is there.

After that comes step 2, also consuming lots of time, and if robust means more robust than the best current engines, we finally come to step 3. If there is no known solution to the 'very robust' problem for problematic cases like huge world dimensions, very fast movements and rotations, huge mass ratios, etc., then we need to 'search' for solutions. And a search is really a search, like A* or similar in AI; there is no way to circumvent searching when the problems are new. It could also be that the problem is NP-hard (there are many places to read about the P vs. NP question; one I recommend is the book "Artificial Intelligence: A Modern Approach" by Stuart J. Russell and Peter Norvig). Simplified, NP-hard means that until now nobody has managed to show that we can do anything fundamentally better than 'searching' to find a solution for such problems.

Searching would be done by using all known heuristics and theoretical information, and trying plausible solutions until one is found or not found; finding out that there is no solution is also a solution. Some robustness problems are for example very easy to solve if we use much better data types, like huge 512 bit floating or fixed point numbers instead of our usual floats or doubles. But then we hit our current technological limits (the speed and memory of our current computers) and the real time constraints a game physics engine must satisfy. All this still does not mean the problem is difficult. There is an obvious way to solve it; the solution might end up being a search that has no time bounds, or a conclusion that the problem is not solvable given the constraints, but this does not mean 'difficult'. What is important, though, is finding out that sometimes a search is needed. That is of course the case for all 'new' problems, which are usually generated by solutions to old problems, or by required improvements, and this is how the beautiful train of technology evolution rolls by, taking all of us enthusiasts on a nice ride.
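To make the data type point a bit more concrete, here is a minimal sketch of the 'huge world dimensions' case. It assumes IEEE 754 floats and doubles (which the post does not state), and the helper names advance and stepIsLost are made up for illustration. It only shows that a small step can vanish at a large coordinate in a float while a double still keeps it; it is not how any particular engine handles the issue.

```cpp
#include <cassert>

// Moves a coordinate by `step` and stores the result back in type Real.
// The volatile store forces rounding to Real even on platforms that
// evaluate intermediate results in a wider register.
template <typename Real>
Real advance(Real position, Real step) {
    volatile Real moved = position + step;
    return moved;
}

// Returns true if the step was lost to rounding (the position did not change).
template <typename Real>
bool stepIsLost(Real position, Real step) {
    return advance(position, step) == position;
}

// Usage sketch: far away from the origin, a float no longer resolves
// a step of one unit, while a double still does.
inline void precisionExample() {
    assert(stepIsLost<float>(1.0e8f, 1.0f));
    assert(!stepIsLost<double>(1.0e8, 1.0));
}
```

A wider type only moves the limit further out, which is exactly where the speed and memory constraints mentioned above come in.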

Being the brain dump it is, there is no conclusion for this post, this topic still needs more thinking, and maybe after a few more related posts, a structured conclusion will come out.

Monday, August 11, 2008

quote "Cargo Cult Methodology: How Agile Can Go Terribly, Terribly Wrong"

Whenever an article contains something like this:

"All of us team members were survivors of another much larger project. That project had been done with outsourcing to a CMM Level 5 organization, with great care in the methodology at our end and with careful detailed project management overall. The project consumed tens of millions of dollars and years of overtime. It failed utterly. "

I just HAVE to read it ...

http://www.cio.com/article/print/442264

related: http://www.ddj.com/architect/209600574?cid=http://www.informationweek.com/maindocs/archive.htm

related: http://brucefwebster.com/2008/06/16/anatomy-of-a-runaway-it-project/

which has a very good part about 'documenting the unnecessary just for the sake of it'

"I truly believe that in the past we have been too ambitious in describing process, in adopting too much process, and in documentation. The reality is that even if people write a lot, very few people will ever read it. Thus the trend towards light will sustain. However, it is easy to be light. The trick is to be as light as possible but not lighter. I believe you will find our work on EssUP and EssWork new and fresh. "

related: http://www.ddj.com/architect/209602001?cid=http://www.informationweek.com/maindocs/archive.htm

" what to do when your stakeholders still insist on having a "precise estimate" at the beginning of the project."

Thursday, August 7, 2008

mixing php and the c++ preprocessor?

Idea

Here I go again, thinking and complaining about the well known limits of c++ meta-programming, the best known complaint candidate being the lack of partial template specialization for functions.
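For reference, the usual workaround for that limitation is to forward the function to a class template, since class templates can be partially specialized. The sketch below is only an illustration under that assumption; DotImpl, dot and dotExample are hypothetical names and not code from any of my projects.

```cpp
#include <cassert>
#include <cstddef>

// A class template CAN be partially specialized, so the real work
// lives in its static member function.
template <typename T, std::size_t N>
struct DotImpl {
    static T apply(const T* a, const T* b) {
        // Recursive case: last component plus the dot product of the rest.
        return a[N - 1] * b[N - 1] + DotImpl<T, N - 1>::apply(a, b);
    }
};

// Partial specialization on N == 0 (T stays generic) ends the recursion.
template <typename T>
struct DotImpl<T, 0> {
    static T apply(const T*, const T*) { return T(); }
};

// The function template only forwards; it is never specialized itself.
template <std::size_t N, typename T>
T dot(const T (&a)[N], const T (&b)[N]) {
    return DotImpl<T, N>::apply(a, b);
}

// Usage sketch: both N and T are deduced from the array arguments.
inline int dotExample() {
    int a[3] = {1, 2, 3};
    int b[3] = {4, 5, 6};
    int result = dot(a, b);  // 1*4 + 2*5 + 3*6
    assert(result == 32);
    return result;
}
```

It works, but it is exactly the kind of boilerplate that makes one start looking for other ways to generate code.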

Meta-programming seems to me to be very close to web server side scripting, à la php for example. Because in the end, this is what the meta in meta-programming means: generating source code via 'meta' code. Well, what about using something like a php processor to meta-program c++ files?

Potential

One could do something like #include "Vector.php.hpp?dims=3". This would include a meta-programmed header for a 3d vector. One advantage is that the resulting header would be plain c++, and it would be possible to generate functions that take exactly 3 arguments, for example

VectorT::VectorT(Type value1, Type value2, Type value3)

which is currently impossible with standard meta-programming.

Actually, the class could directly be generated as Vector3 or Vector3D, where usually it would be Vector<3>. Of course this can be typedef'd, and I am a big fan of typedefs; they are great for writing fire and forget, self-refactoring code. But anyway, it would be possible...

Taking it to the extreme we would probably be able to do something like this:

#include "Vector.php.hpp?class=FunkyVector3D, dims=3" and have a resulting class called FunkyVector3D! Changing the names of classes at will: would that be a maintenance disaster, or simply more responsibility?
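As a rough sketch of what such a generator would have to emit, here is the idea written as a small C++ function instead of a php script, purely to keep the examples in one language. The function generateVectorHeader and the layout of the generated class are assumptions made up for illustration; the query-string #include itself remains an idea and is not something any preprocessor supports.

```cpp
#include <cassert>
#include <sstream>
#include <string>

// Hypothetical stand-in for "Vector.php.hpp?class=...&dims=...":
// returns the text of a plain C++ header for the requested class.
std::string generateVectorHeader(const std::string& className, int dims) {
    assert(dims > 0);
    std::ostringstream out;
    out << "template <typename Type>\n"
        << "struct " << className << " {\n"
        << "    Type v[" << dims << "];\n"
        << "    " << className << "(";
    // One constructor parameter per dimension: Type value1, Type value2, ...
    for (int i = 1; i <= dims; ++i) {
        out << (i > 1 ? ", " : "") << "Type value" << i;
    }
    out << ") {\n";
    // One assignment per dimension in the constructor body.
    for (int i = 1; i <= dims; ++i) {
        out << "        v[" << (i - 1) << "] = value" << i << ";\n";
    }
    out << "    }\n"
        << "};\n";
    return out.str();
}
```

Calling generateVectorHeader("FunkyVector3D", 3) yields a header whose constructor takes exactly three arguments, and the class name is just another parameter, which is where the maintenance question above comes from.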

Issues

Planning will definitely be needed so that things don't get out of control and turn into a cryptic mess.

I suspect, though, that without some preprocessor features to help with this, issues will arise (name collisions, linker errors, classes not found...).

Time for bed though, I will think about it some more when I have more time, but this could definitely be a cool experiment.

Monday, August 4, 2008

marriage to c++ (also known as 'c++, elegant static arrays' part 2)

Motivation

I received feedback on the 'c++ static arrays' post from numerous developer friends, who are all unfortunately too lazy to post a comment.

Some were scared by the meta-programming heaviness, while others said it's overkill.

I fed their feedback into my thinking machine and came to the following conclusion: the difference between us is that I am married to c++, while the skeptics have only been dating it, and it makes a big difference even if they have been dating for years.

Analysis

This kind of situation happens whenever you spend a lot of time in a relationship with something or somebody, be it c++ in my case, your car, your flat, your partner, your friend and EVEN your new iPhone! The situation always evolves the same way: in the beginning you enjoy the new benefits, you only see the cool features, you are happy it works and you are satisfied. But eventually you start taking all the good things for granted and only see the small annoyances, which turn into big annoyances because they become all you see. It is like taking a small coin and sticking it into your eye: even though it is small, it is all you see, and it is very painful. I could turn this into a human relationship post, but I will just say it is different there, because people have feelings, and trying to change others is in many cases egoistic, in other rarer cases the right thing, but in most cases it can hurt feelings. Let's close it here and concentrate on c++, which until now has not developed any feelings.

Allow me to change you...

The 'issues' can be divided into 2 types:

There are the things that I don't like when done the "copy from book/tutorial/demo" way. This does not mean that the book/tutorial/demo is bad, of course not! But things have to be put in context. You cannot simply take whatever code was there and stick it into a codebase, especially if it is large and complex. The culprit here, it turns out, is the programmer. A beginner programmer is automatically excused; he is happy enough that things are working, and that's fine, I am happy. But I see many experienced programmers who should know better, who should know that they have to write code that has minimal dependencies, needs minimal changes, is robust and is very good at detecting problems at run time. Not just for the sake of it, or because of some obscure obsession with code elegance, but because anything else is wasted time and money in any term except the short term. Then I am not happy!

Most such issues can easily be solved, however, by using meta-programming for example, and by thinking long term. Knowing how long 'long term' is happens to be an important skill too, by the way, but let us skip that and move to the second type.

This is the tougher type, with issues that are impossible to solve without the help of the compiler (except when killing performance or causing other big disasters is no problem). In these cases we can just hope and wait for things like c++0x, tr1 and friends to come to the rescue. One example mentioned in the previous post is the 'auto' keyword, which allows code to be made less dependent and more change resilient, among other benefits.
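A minimal sketch of what 'less dependent' means here, assuming a compiler that already implements the c++0x 'auto' keyword. The functions loadScores and totalScore are hypothetical and only serve the illustration.

```cpp
#include <cassert>
#include <vector>

// Hypothetical data source; its return type may change later
// (for example to std::list<int> or std::deque<int>).
std::vector<int> loadScores() {
    std::vector<int> scores;
    scores.push_back(10);
    scores.push_back(20);
    scores.push_back(12);
    return scores;
}

int totalScore() {
    // auto follows whatever loadScores() returns, so this function
    // needs no edit if the container type changes.
    auto scores = loadScores();
    int sum = 0;
    // The iterator type is never spelled out either.
    for (auto it = scores.begin(); it != scores.end(); ++it) {
        sum += *it;
    }
    assert(sum >= 0);  // holds for the sample data above
    return sum;
}
```

Without auto, both the container type and its const_iterator would have to be named in totalScore, and each of those names is one more place to touch when loadScores changes.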

But unsolved compiler dependent issues remain (themes for future rants), seemingly meaningless to most ... except the married.
