Saturday, July 10, 2010

Wednesday, June 16, 2010

Monday, May 31, 2010

Homegrown math study group.

Tom Lahore and me, and I am sure many others, find that there are not enough practical problems forcing us non-PhD / non-academic to delve into math as much as we like. It is a chicken and egg problem and we have strongly felt during the last years that we should do something about it and never had the discipline to do it consistently and therefore usefully. We will give this another shot at http://sites.google.com/site/77neuronsprojectperelman/

We will start by using the Khan academy videos (1 to 2 videos per week) and discuss them during sundays possibly using some online conference tool and use the wiki to share our thoughts. The Site is public so feel free to do it with us. Will we fail and stop in a month? I don't know ... we hope the fact that we do it in a group and that we use very low commitment (1 to 2 videos per week) will help.

We will start by using the Khan academy videos (1 to 2 videos per week) and discuss them during sundays possibly using some online conference tool and use the wiki to share our thoughts. The Site is public so feel free to do it with us. Will we fail and stop in a month? I don't know ... we hope the fact that we do it in a group and that we use very low commitment (1 to 2 videos per week) will help.

Saturday, May 22, 2010

That's what happens when you are researching human locomotion.

You find sentences like:

"Modern humans exhibit a much higher body fat content and reduced relative muscle mass than their ancestral counterparts, trends that are seen in domesticated animals generally (Allen and Mackey, 1982; O’Dea, 1991; Clutton-Brock, 1999)." (http://jeb.biologists.org/cgi/content/full/204/18/3235).

Saturday, April 10, 2010

More time = shorter letter

Yet another extremely valuable addition to my neuronal network. (Thanks to R.M)

How true!

Wednesday, January 20, 2010

Anything must be something, except for nothing ...

We all have encountered and enjoyed seemingly mind convoluting statements like:

"This statement is false" or "I am a liar", such statements are basically 'unprovable'.

I have been reading about this while investigating logic and it's roots, mathematics, number theory, Goedel .... Some older posts are related to this.

Recently I decided to write down one such sentence that comes pretty naturally whenever you start thinking about what 'something' is.

Here is the complete sentence:

"Anything (any 'thing') must be 'something', except for nothing, which is of course also 'something', but on a different level of thingness, which goes up and down into itself to infinity."

The sentence flows pretty naturally when you are making it up, you start with:

"Anything must be something"

But then your mind remembers that there is an exception to that so you add:

"Except for nothing"

Again your mind jumps in, it cannot accept the void, this 'nothing' also fits the mind's intuitive notion of 'something', in the end, we just mentioned it, so it must be something, and this is where the fun starts, so you add:

"Nothing is also some kind of 'something'"

But then you fell uncomfortable, now nothing is nothing and something at the same time, but let's simply go on trying to explain how we feel about that:

"But on a different level of thingness"

The mind is trying to say that this nothing on one level (of 'thingness') is 'something', yes, but not on that same level), but now we have two levels, we needed those to resolve the paradox of nothing, but that's a problem, because on that new level we can probably do the same, and we can also think that the lower level is an upper level for some other level which has a 'nothing'. So we add:

"which goes up and down into itself to infinity."

So here we have it, nothing, something, a paradox and infinity all at the same time, plus the inability to make logical sense out of even the simplest everyday construct.

If you have been reading some Set theory, Number theory, Russel, Hilbert, Whitehead, Turing and friends all this would seem all too familiar: nothing could be the more formal 'Empty Set {}' ... and it's a long ride after that. So this is my layman's version of what all these geniuses and many others spent years thinking about, if that makes you interested, I suggest you read the most excellent book: "Godel, Escher, Bach: An Eternal Golden Braid"

I also found it interesting that this multi-level hierarchy of rules that we make up to reflect on a lower system from a higher system (escaping to the meta level as some colleagues would say) is inherent in the way we think, it is even mentioned in a seemingly unrelated game design book "Theory of Fun" and also a recurrent topic in our AI related discussions.

Next I will be investigating in more detailed the 'Completeness' part of this whole topic coming from Goedel's famous "Incompleteness theorem" that seems to be touching the physical limits of our brain and melting them in the core. More specifically completeness relative to what? Logic itself? probably, but, but ....

.

Wednesday, December 30, 2009

The answer from above

While we game AI programmers noodle with our gross simplfications and regrettable /understandably unavoidable but also fun and challenging real-time performance constraints, we occasionally look up for any new answers from above, normally we are too busy to stay up to date, but it's the holidays and I am bored, I have no ps3 devkit here, nor a PC with a dev. environment to do some brainless coding... so I had to be brainful and start reading and so I stumbled upon this: "the currently available theories do not explain or engender anything resembling human-level general intelligence" what is meant here is theories coming from Information-processing psychology e.g: Cognitive Science and Cognitive Neuroscience. (source: http://www.cs.umd.edu/~nau/cmsc722/)

I wonder when such theories will start to be discovered and what kind of processing power we will have at the time and if they will good enough to allow the ones who will be peeking there at the time and spotting low hanging fruits to become famous applying them to video games (and other applications) being again, at the right time and place.

Sunday, November 29, 2009

Now that's bad performance code! once and for all....

Translating an idea into a piece of code is an over-constrained problem, just like many other problems.

To decide how to code something, make a list of all points you think are important for it (maintainability, performance, easy to read by me, easy to reuse by me, flexible, many other pieces will depend on it, multi-platform, multi-compiler, link fast, compile fast, short names for faster typing, easy to read/understand/reuse for my colleagues, easy to read/understand/reuse for my clients, cryptic to prove I am 'old school' and can write assembly and you should be scared of discussing it with me, totally abstract to prove I don't care about performance and want to make a point that premature optimization is the source of all evil, totally lean and mean to prove that non-premature optimization is the road to a lame duck... you name it! I don't care what you put in there, the list can be very long and can include anything you like), score the points in your list based on their utility for the piece of code to be written with the very welcome possibility of zero utility for some of them (makes it less constrained).

You cannot compare apples to oranges? (e.g: maintainability vs. performance) ? yes you can (Yes son, you can compare apples to oranges... )! on top of that, you have no choice...

Finally, code/make compromises to maximize the total score, that's all there is to it and being an over-constrained problem for anything none-trivial it won't be completely obvious.

But the problem is clear, no need to call a programming style 'too old school' or another one 'too abstract' or 'too object oriented'. The higher the total score, the better ... that's it.

Now if u do not have the necessary coding skills, you might generate code that has a total score that is not the maximum possible ... but that is another topic.

.

To decide how to code something, make a list of all points you think are important for it (maintainability, performance, easy to read by me, easy to reuse by me, flexible, many other pieces will depend on it, multi-platform, multi-compiler, link fast, compile fast, short names for faster typing, easy to read/understand/reuse for my colleagues, easy to read/understand/reuse for my clients, cryptic to prove I am 'old school' and can write assembly and you should be scared of discussing it with me, totally abstract to prove I don't care about performance and want to make a point that premature optimization is the source of all evil, totally lean and mean to prove that non-premature optimization is the road to a lame duck... you name it! I don't care what you put in there, the list can be very long and can include anything you like), score the points in your list based on their utility for the piece of code to be written with the very welcome possibility of zero utility for some of them (makes it less constrained).

You cannot compare apples to oranges? (e.g: maintainability vs. performance) ? yes you can (Yes son, you can compare apples to oranges... )! on top of that, you have no choice...

Finally, code/make compromises to maximize the total score, that's all there is to it and being an over-constrained problem for anything none-trivial it won't be completely obvious.

But the problem is clear, no need to call a programming style 'too old school' or another one 'too abstract' or 'too object oriented'. The higher the total score, the better ... that's it.

Now if u do not have the necessary coding skills, you might generate code that has a total score that is not the maximum possible ... but that is another topic.

.

AI room + blackboard = geek art

PS3 game/FPS AI research

Monday, October 19, 2009

Tech-radio silence

It has been some time since I posted anything but I am still alive and still scratching my brain the whole time, the reason for the radio-silence is that since September 2009 I am an AI coder at Guerrilla Games, it is a great experience.

I was going to take some pics but I found this:

http://ps3life.nl/nieuws/4528-een-kijkje-rond-en-in-guerrilla-studios/ this is how it looks like in here currently.

I was also interviewed at AiGameDev (http://aigamedev.com/insider/event/event-career-journey/), if you want to laugh at how sleepy the tone of my voice makes you will be probably be able to see it when it's posted as a video capture sometime in the near future.

More to come...

I was going to take some pics but I found this:

http://ps3life.nl/nieuws/4528-een-kijkje-rond-en-in-guerrilla-studios/ this is how it looks like in here currently.

I was also interviewed at AiGameDev (http://aigamedev.com/insider/event/event-career-journey/), if you want to laugh at how sleepy the tone of my voice makes you will be probably be able to see it when it's posted as a video capture sometime in the near future.

More to come...

Friday, August 21, 2009

Irrationals on the border of existence and sqrt(2)

I have been reading a lot about abstract math, what numbers really are and are not, set theoretic number theory and related. The set theoretic approach even if I did not dig into the deepest depths of it, allowed me to be able to logically justify to myself the existence and nature of numbers.

I even bothered my wife (who could not care less) about the beauty I found in the irrational number: square root of 2, what I told her is the following:

I will prove to you how beautiful is math and that we should be grateful for all the people who contributed to it along the centuries of human thinking. I will give you a calculator and you can only use to to multiply, now with no other references, find me the exact square root of 2. Of course, one would proceed to multiply 1.1*1.1= 1.21 then 1.5*1.5=2.25, hence coming to the conclusion that 1.1 < style="font-weight: bold;">

-------------------------------------------------------------------------------------------------

The following reductio ad absurdum argument showing the irrationality of √2 is less well-known. It uses the additional information 2 > √2 > 1 so that 1 > √2 − 1 > 0.

One could almost argue such numbers do not really exist, in the end, they are not called crazy/irrational (and have been fought) for no reason! The way I see it is that they don't at least as written out numbers, they do exist if we set a desired precision, this is why I am liking what I call a 'computationally theoretic number theory', no idea if it exists but you get my point. By setting a precision we can work with those things. One could argue that the number exists and that it's representation is sqrt(2), but this is not a number, the way I see it is that this is a rational (existing) number combined with an algorithm (or call it a function) that can transform this into another in this case irrational number, so either we imprecisely write down a number that approximates it to a given precision or we represent it as an algortihm (sqrt) and data (2) that expand to this 'inexisting' number, this is all layman terms and layman talk, and mathematicians will laugh, but I am recording these thoughts because, since I am a bit satisfied with what I know about this now, I will stop digging and go back to the actual reason I started to look into math again, and that is to solidify my math needed for a self designed auto-didactic machine learning 'course' in my free time. Another sqrt(2) existence thought occured to me in the car last weekend, imagine you have a piece of rubber of length 1, now you take it and stretch it to length 2, did you pass by sqrt(2)? you must have, so it exists? can one measure it? again only to some precision ... (even on the atomic/quantum level). almost mind boggling, this infinity of numbers, but it also makes sense, we allowed for it the moment we allowed ourselves to have a coma and numbers after it, after that recursively you have infinities of infinities of infinities ... but all still allow me not to explode when I look at the set theoretic number theory, basically it is ordering and number of things (in layman, mathematician laugh terms) ... between 1 and 2 there is an infinity of numbers, same as between 1 and 1.1 and 1 and 1.0001 ... in any case ... back to less mind boggling and much more practical stuff, in the spirit of the way ppl have been using numbers since ages for practical matter and not even really understanding what they are. and let me reiterate, please excuse the layman :( he's just trying to make sense of it within a very limited amount of time.

Addendum:

* bourbaki, one of my favorite persons for math discussions, does not think this post is utter non-sense and he just pointed me to:

http://en.wikipedia.org/wiki/List_of_paradoxes, http://en.wikipedia.org/wiki/Continuum_hypothesis

-------------------------------------------------------------------------------------------------

Set theory is the branch of mathematics

Mathematics is the study of quantity, structure, space, change, and related topics of pattern and form. Mathematicians seek out patterns whether found in numbers, space, natural science, computers, imaginary abstractions, or elsewhere....

that studies sets, which are collections of objects. Although any type of object can be collected into a set, set theory is applied most often to objects that are relevant to mathematics.

The modern study of set theory was initiated by Cantor

Georg Ferdinand Ludwig Philipp Cantor was a Germany mathematician, born in Russia. He is best known as the creator of set theory, which has become a foundations of mathematics in mathematics....

and Dedekind in the 1870s. After the discovery of paradoxes

Naive set theory is one of several theories of sets used in the discussion of the foundations of mathematics. The informal content of this naive set theory supports both the aspects of mathematical sets familiar in discrete mathematics , and the everyday usage of set theory concepts in most contemporary mathematics....

in informal set theory, numerous axiom systems were proposed in the early twentieth century, of which the Zermelo–Fraenkel axioms

Zermelo?Fraenkel set theory with the axiom of choice, commonly abbreviated ZFC, is the standard form of axiomatic set theory and as such is the most common foundations of mathematics....

, with the axiom of choice

In mathematics, the axiom of choice, or AC, is an axiom of set theory. Informally put, the axiom of choice says that given any collection of bins, each containing at least one object, it is possible to make a selection of exactly one object from each bin, even if there are infinite set many bins and there is no "rule" for which object t...

, are the best-known.

Set theory, formalized using first-order logic

First-order logic is a formal deductive system used in mathematics, philosophy, linguistics, and computer science. It goes by many names, including: first-order predicate calculus , the lower predicate calculus, the language of first-order logic or predicate logic....

, is the most common foundational system for mathematics.

----------------------------------------------------------------------

Some References:

* http://www-groups.dcs.st-and.ac.uk/~history/HistTopics/Beginnings_of_set_theory.html

* http://www.absoluteastronomy.com/topics/Naive_set_theory

* http://en.wikipedia.org/wiki/Square_root_of_2#Proofs_of_irrationality

* The essence of discrete mathematics book

* ...

.

I even bothered my wife (who could not care less) about the beauty I found in the irrational number: square root of 2, what I told her is the following:

I will prove to you how beautiful is math and that we should be grateful for all the people who contributed to it along the centuries of human thinking. I will give you a calculator and you can only use to to multiply, now with no other references, find me the exact square root of 2. Of course, one would proceed to multiply 1.1*1.1= 1.21 then 1.5*1.5=2.25, hence coming to the conclusion that 1.1 < style="font-weight: bold;">

-------------------------------------------------------------------------------------------------

The following reductio ad absurdum argument showing the irrationality of √2 is less well-known. It uses the additional information 2 > √2 > 1 so that 1 > √2 − 1 > 0.

- Assume that √2 is a rational number. This would mean that there exist positive integers m and n with n ≠ 0 such that m/n = √2. Then m = n√2 and m√2 = 2n.

- We may assume that n is the smallest integer so that n√2 is an integer. That is, that the fraction m/n is in lowest terms.

- Then

- Since 1 > √2 − 1 > 0, it follows that n > n(√2 − 1) = m − n > 0.

- So the fraction m/n for √2, which according to (2) is already in lowest terms, is represented by (3) in strictly lower terms. This is a contradiction, so the assumption that √2 is rational must be false.

One could almost argue such numbers do not really exist, in the end, they are not called crazy/irrational (and have been fought) for no reason! The way I see it is that they don't at least as written out numbers, they do exist if we set a desired precision, this is why I am liking what I call a 'computationally theoretic number theory', no idea if it exists but you get my point. By setting a precision we can work with those things. One could argue that the number exists and that it's representation is sqrt(2), but this is not a number, the way I see it is that this is a rational (existing) number combined with an algorithm (or call it a function) that can transform this into another in this case irrational number, so either we imprecisely write down a number that approximates it to a given precision or we represent it as an algortihm (sqrt) and data (2) that expand to this 'inexisting' number, this is all layman terms and layman talk, and mathematicians will laugh, but I am recording these thoughts because, since I am a bit satisfied with what I know about this now, I will stop digging and go back to the actual reason I started to look into math again, and that is to solidify my math needed for a self designed auto-didactic machine learning 'course' in my free time. Another sqrt(2) existence thought occured to me in the car last weekend, imagine you have a piece of rubber of length 1, now you take it and stretch it to length 2, did you pass by sqrt(2)? you must have, so it exists? can one measure it? again only to some precision ... (even on the atomic/quantum level). almost mind boggling, this infinity of numbers, but it also makes sense, we allowed for it the moment we allowed ourselves to have a coma and numbers after it, after that recursively you have infinities of infinities of infinities ... but all still allow me not to explode when I look at the set theoretic number theory, basically it is ordering and number of things (in layman, mathematician laugh terms) ... between 1 and 2 there is an infinity of numbers, same as between 1 and 1.1 and 1 and 1.0001 ... in any case ... back to less mind boggling and much more practical stuff, in the spirit of the way ppl have been using numbers since ages for practical matter and not even really understanding what they are. and let me reiterate, please excuse the layman :( he's just trying to make sense of it within a very limited amount of time.

Addendum:

* bourbaki, one of my favorite persons for math discussions, does not think this post is utter non-sense and he just pointed me to:

http://en.wikipedia.org/wiki/List_of_paradoxes, http://en.wikipedia.org/wiki/Continuum_hypothesis

-------------------------------------------------------------------------------------------------

Set theory is the branch of mathematics

Mathematics

Mathematics is the study of quantity, structure, space, change, and related topics of pattern and form. Mathematicians seek out patterns whether found in numbers, space, natural science, computers, imaginary abstractions, or elsewhere....

that studies sets, which are collections of objects. Although any type of object can be collected into a set, set theory is applied most often to objects that are relevant to mathematics.

The modern study of set theory was initiated by Cantor

Georg Cantor

Georg Ferdinand Ludwig Philipp Cantor was a Germany mathematician, born in Russia. He is best known as the creator of set theory, which has become a foundations of mathematics in mathematics....

and Dedekind in the 1870s. After the discovery of paradoxes

Naive set theory

Naive set theory is one of several theories of sets used in the discussion of the foundations of mathematics. The informal content of this naive set theory supports both the aspects of mathematical sets familiar in discrete mathematics , and the everyday usage of set theory concepts in most contemporary mathematics....

in informal set theory, numerous axiom systems were proposed in the early twentieth century, of which the Zermelo–Fraenkel axioms

Zermelo–Fraenkel set theory

Zermelo?Fraenkel set theory with the axiom of choice, commonly abbreviated ZFC, is the standard form of axiomatic set theory and as such is the most common foundations of mathematics....

, with the axiom of choice

Axiom of choice

In mathematics, the axiom of choice, or AC, is an axiom of set theory. Informally put, the axiom of choice says that given any collection of bins, each containing at least one object, it is possible to make a selection of exactly one object from each bin, even if there are infinite set many bins and there is no "rule" for which object t...

, are the best-known.

Set theory, formalized using first-order logic

First-order logic

First-order logic is a formal deductive system used in mathematics, philosophy, linguistics, and computer science. It goes by many names, including: first-order predicate calculus , the lower predicate calculus, the language of first-order logic or predicate logic....

, is the most common foundational system for mathematics.

----------------------------------------------------------------------

Some References:

* http://www-groups.dcs.st-and.ac.uk/~history/HistTopics/Beginnings_of_set_theory.html

* http://www.absoluteastronomy.com/topics/Naive_set_theory

* http://en.wikipedia.org/wiki/Square_root_of_2#Proofs_of_irrationality

* The essence of discrete mathematics book

* ...

.

Saturday, July 25, 2009

At the end of the day, he would still be staring at the same blank sheet of paper.

Did it ever happen to you that you would sit to try to solve a new problem, and the more you would think about it the less it would make sense to you? if you would do that at your desk, would you then be considered non-productive? if you were a game developer be it technical, artistic or manager, sitting there and not typing for hours without making any progress would that be bad? well, Bertrand Russell, one of the most famous logicians of all times did exactly that, so you are ok :)

S = {x : x is a set and x !∈ x}.

In other words, S is the set of all sets that do not contain themselves.

In more 'naive' words:

* In Seville, there’s a barber who shaves all those people who do not shave themselves. Does the

barber shave himself or not? This is known as the “Barber of Seville problem”.

* Imagine a card. On one side is written, “The statement on the other side of this card is true.” and

on the other side is written, “The statement on the other side of this card is false.”

Bertrand Russell, one of the most famous logicians ever, struggled with this problem for a long time. In his autobiography, he describes just how hard he found the problem. Every morning, he said, he would sit down at his desk with a blank piece of paper in front of him. At the end of the day, he would still be staring at the same blank sheet of paper.

Russell’s final resolution to the problem is described in his “Principia Mathematica”, written with Alfred North Whitehead, in which he introduced a “Theory of Types” to get around his paradox. The basic idea was this: sets cannot contain themselves....

http://www.geometer.org/mathcircles/nothing.pdf

.

S = {x : x is a set and x !∈ x}.

In other words, S is the set of all sets that do not contain themselves.

In more 'naive' words:

* In Seville, there’s a barber who shaves all those people who do not shave themselves. Does the

barber shave himself or not? This is known as the “Barber of Seville problem”.

* Imagine a card. On one side is written, “The statement on the other side of this card is true.” and

on the other side is written, “The statement on the other side of this card is false.”

Bertrand Russell, one of the most famous logicians ever, struggled with this problem for a long time. In his autobiography, he describes just how hard he found the problem. Every morning, he said, he would sit down at his desk with a blank piece of paper in front of him. At the end of the day, he would still be staring at the same blank sheet of paper.

Russell’s final resolution to the problem is described in his “Principia Mathematica”, written with Alfred North Whitehead, in which he introduced a “Theory of Types” to get around his paradox. The basic idea was this: sets cannot contain themselves....

http://www.geometer.org/mathcircles/nothing.pdf

.

Tuesday, July 21, 2009

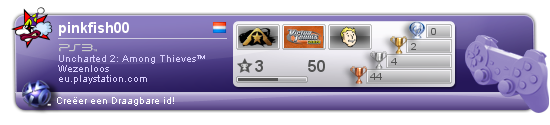

My steam gamer card, join to talk AI while blasting baddies :P

Yes son, you can compare apples to oranges...

One of the things that bothered me while tweaking and tuning the Keltis AI heuristics, was that ultimately things sometimes boiled down to the need to compare apples to oranges, unfortunately I do not remember the exact details and I am too lazy to dig them up, but I know that I had to compare values that I was not able to reduce to a common unit to measure by (like risk per example), it was really a matter of preference, this is not a new problem, and with my head in the details, I failed to notice the obvious, this is an old topic called utility that economists have been using for decades, of course, as usual, I said 'aha' just after getting my head out of the details and shipping.

It was no big deal though, I ended up using utility without knowing it.

Utility is 'the' way to compare apples to oranges, but what brings me to today's rant is that I remembered this while reading in the context of my ongoing current research in applying Reinforcement Learning to Animation planning.

The question in question is about a very valid question [ :) :D :P ] about the 'essence' of Reinforcement Learning (similar to http://rlai.cs.ualberta.ca/RLAI/rewardhypothesis.html) :

Is it sensible to treat all preferences as numeric rewards on a single scale? Theoretically, yes. There is a theorem (North [4]) that if you believe four fairly simple axioms about preferences, then you can derive the existence of a real-valued utility function. (The only mildly controversial axiom is substitutability: that if you prefer A to B, then you must prefer a coin flip between A and C to a coin flip between B and C.) Practically, it depends. Users often find it hard to articulate their preferences as numbers. (Example: you have to design the controller for a nuclear power plant. How many dollars is a human life worth?)source: http://www.eecs.umich.edu/~baveja/RLMasses/node5.html#SECTION00032000000000000000

I could not find the original in free electronic format: "D. W. North. A tutorial introduction to decision theory. IEEE Transactions on Systems Man and Cybernetics, SSC-4(3), Sept. 1968. "

If anyone can provide it I would be grateful, it is always very insightful to read about the essence of these things, this usually involves reading very old papers, and from my experience it is always worth it, it gives lots of confidence when applying things later and when doubts appear, because much thought and critical thinking went into each and every 'fact' we take today for granted, and for too naive tomorrow.

.

Saturday, July 11, 2009

Jad the Naive Mathematician, the absurdity of logic

Here I present my brain, it has been learning and evolving for some time, and recently, it noticed that, logically, the math it thought makes sense, actually doesn't.

The source of 'Math'

This goes some time back into the past, when I suddenly felt the urge to see where Math starts, because logically, and this is something I remember was the base of proving stuff, you need to base yourself on something that is true to prove something else. Anybody who knows a little bit about this knows that this directly leads to Axioms, Occam's razor, Goedel and co...

Useless education

Funny we have been thinking we know our very basic math, but we really do not even know that.

Even the pythagorean theorem seems not logical looking at it this way. Looking at proofs, the proofs themselves either use geometric manipulation of squares, triangles making assertions about areas, and some of those proofs came from periods were an area was something intuitive and not really formalized, come to think of it the concept of area itself is pretty much elusive, and looking for the rigorous math definition leads you to Reimann and others, and that's pretty recent in history. What's more annoying, I made it through school and a Bachelor in Engineering and I never once heard of them. What is even more annoying, I felt I knew what an 'area' is although, if I had thought critically and logically, I would have came ot the conclusion that there is something elusive about it, just like I did recently.

All of this post comes after lots of going back to trying to understand the roots of math, using wikipedia and google, some of this are listed in the bottom of the post.

Proof of a proof

One nice idea from this quest is Goedel and his Incompleteness theorem, naively for me right now it means you need to start from something to make any proofs, and that something you started from cannot be proved. I will not go back and read the details, but while taking a shower just now, I became curious as to how Goedel proved this, did he use an axiom as a base, if this axiom was removed, not even this could be proven? This got me to think about what logic is, and about the 'axioms' of logic. Logic seems to be something the brain can very easily accept and use as a base. Again going back to Engineering, much of what is left is the logic. But why? and what is logic, isn't it absurd by itself? what is the logic that logic is based on? Why does the brain readily accept it? (without 'proof').

A group of 'things', excluding 'Neo, the source'

This got me to realize that there is a certain group of things, that all fall into some category for which I don't have a name: Logic (needing logic to make sense), time (continuous/discrete), infinity, zero (1 over infinity!), space and it's size both endless and not being absurd (same for time). All these things feel like one and the same, or belonging to one category. We end up accepting them and even using them, but few of us really grasp them.

Think versus. Grasp

I also remembered vaguely something that I think Einstein said about things a human brain will never grasp, comparing to a table with eyes looking down never being able to see what is above it (I am not sure about the exactness of any of this). But what I recently found interesting, is the fact that we are able to think about these things, even though we might not be able to understand them (by construction?), why this separation? why can't we only think about things we can understand? Does this boundary mean something? and what?

Dump and live on

I wrote this post mainly for one reason: get it off my brain to free it for thinking about more practical stuff.

Feel free to express your opinion about this at

http://forums.aigamedev.com/showthread.php?p=15004#post15004

Some of the references

http://www.mathacademy.com/pr/prime/articles/fta/index.asp?LEV=&TBM=&TAL=&TAN=&TBI=&TCA=&TCS=&TDI=&TEC=&TFO=&TGE=&TGR=&THI=&TNT=&TPH=&TST=&TTO=&TTR=&TAD=

http://www.mathacademy.com/pr/prime/articles/irr2/index.asp

http://www.google.de/search?q=proof+square+root+of+2+is+irrational&ie=utf-8&oe=utf-8&aq=t&rls=org.mozilla:en-US:official&client=firefox-a

http://en.wikipedia.org/wiki/Well-order

http://en.wikipedia.org/wiki/Infinite_descent

http://en.wikipedia.org/wiki/Square_root_of_2

http://en.wikipedia.org/wiki/Rational_number

http://eu.wiley.com/WileyCDA/WileyTitle/productCd-0470211520.html

http://en.wikipedia.org/wiki/Commensurability_(mathematics)

http://www.boost.org/doc/libs/1_37_0/libs/math/doc/sf_and_dist/html/math_toolkit/special/ellint/ellint_intro.html

http://en.wikipedia.org/wiki/Elliptic_integral

http://sci.tech-archive.net/Archive/sci.math/2006-09/msg04719.html

http://books.google.de/books?id=RM1D3mFw2u0C&pg=PA7&lpg=PA7&dq=%22rigorous+definition+of+area%22&source=bl&ots=jiarfVKaP5&sig=OAi9X-H7Hnp92BdfIuiIA911KSc&hl=en&ei=jJdXSomENIed_AahldSdCQ&sa=X&oi=book_result&ct=result&resnum=7

http://www.amazon.co.uk/gp/offer-listing/0133459438/ref=dp_olp_1?ie=UTF8&qid=1247256551&sr=8-1

http://www.amazon.com/gp/product/images/0486439461/ref=dp_image_0?ie=UTF8&n=283155&s=books

http://www.amazon.com/s/ref=nb_ss_b?url=search-alias%3Dstripbooks&field-keywords=Discrete+Mathematics&x=0&y=0

http://www.mathkb.com/Uwe/Forum.aspx/math/16463/Concept-of-measure-in-undergraduate-mathematics

http://www.google.de/search?hl=en&safe=off&client=firefox-a&rls=org.mozilla%3Aen-US%3Aofficial&hs=iW1&num=100&q=%22rigorous+definition+of+area%22&btnG=Search

http://www.youtube.com/results?search_query=The+Fundamental+Theorem+of+Calculus&search_type=&aq=f

http://www.youtube.com/watch?v=MOnnMlMM70Q&feature=PlayList&p=D4E266DF4E3352B1&index=18

The source of 'Math'

This goes some time back into the past, when I suddenly felt the urge to see where Math starts, because logically, and this is something I remember was the base of proving stuff, you need to base yourself on something that is true to prove something else. Anybody who knows a little bit about this knows that this directly leads to Axioms, Occam's razor, Goedel and co...

Useless education

Funny we have been thinking we know our very basic math, but we really do not even know that.

Even the pythagorean theorem seems not logical looking at it this way. Looking at proofs, the proofs themselves either use geometric manipulation of squares, triangles making assertions about areas, and some of those proofs came from periods were an area was something intuitive and not really formalized, come to think of it the concept of area itself is pretty much elusive, and looking for the rigorous math definition leads you to Reimann and others, and that's pretty recent in history. What's more annoying, I made it through school and a Bachelor in Engineering and I never once heard of them. What is even more annoying, I felt I knew what an 'area' is although, if I had thought critically and logically, I would have came ot the conclusion that there is something elusive about it, just like I did recently.

All of this post comes after lots of going back to trying to understand the roots of math, using wikipedia and google, some of this are listed in the bottom of the post.

Proof of a proof

One nice idea from this quest is Goedel and his Incompleteness theorem, naively for me right now it means you need to start from something to make any proofs, and that something you started from cannot be proved. I will not go back and read the details, but while taking a shower just now, I became curious as to how Goedel proved this, did he use an axiom as a base, if this axiom was removed, not even this could be proven? This got me to think about what logic is, and about the 'axioms' of logic. Logic seems to be something the brain can very easily accept and use as a base. Again going back to Engineering, much of what is left is the logic. But why? and what is logic, isn't it absurd by itself? what is the logic that logic is based on? Why does the brain readily accept it? (without 'proof').

A group of 'things', excluding 'Neo, the source'

This got me to realize that there is a certain group of things, that all fall into some category for which I don't have a name: Logic (needing logic to make sense), time (continuous/discrete), infinity, zero (1 over infinity!), space and it's size both endless and not being absurd (same for time). All these things feel like one and the same, or belonging to one category. We end up accepting them and even using them, but few of us really grasp them.

Think versus. Grasp

I also remembered vaguely something that I think Einstein said about things a human brain will never grasp, comparing to a table with eyes looking down never being able to see what is above it (I am not sure about the exactness of any of this). But what I recently found interesting, is the fact that we are able to think about these things, even though we might not be able to understand them (by construction?), why this separation? why can't we only think about things we can understand? Does this boundary mean something? and what?

Dump and live on

I wrote this post mainly for one reason: get it off my brain to free it for thinking about more practical stuff.

Feel free to express your opinion about this at

http://forums.aigamedev.com/showthread.php?p=15004#post15004

Some of the references

http://www.mathacademy.com/pr/prime/articles/fta/index.asp?LEV=&TBM=&TAL=&TAN=&TBI=&TCA=&TCS=&TDI=&TEC=&TFO=&TGE=&TGR=&THI=&TNT=&TPH=&TST=&TTO=&TTR=&TAD=

http://www.mathacademy.com/pr/prime/articles/irr2/index.asp

http://www.google.de/search?q=proof+square+root+of+2+is+irrational&ie=utf-8&oe=utf-8&aq=t&rls=org.mozilla:en-US:official&client=firefox-a

http://en.wikipedia.org/wiki/Well-order

http://en.wikipedia.org/wiki/Infinite_descent

http://en.wikipedia.org/wiki/Square_root_of_2

http://en.wikipedia.org/wiki/Rational_number

http://eu.wiley.com/WileyCDA/WileyTitle/productCd-0470211520.html

http://en.wikipedia.org/wiki/Commensurability_(mathematics)

http://www.boost.org/doc/libs/1_37_0/libs/math/doc/sf_and_dist/html/math_toolkit/special/ellint/ellint_intro.html

http://en.wikipedia.org/wiki/Elliptic_integral

http://sci.tech-archive.net/Archive/sci.math/2006-09/msg04719.html

http://books.google.de/books?id=RM1D3mFw2u0C&pg=PA7&lpg=PA7&dq=%22rigorous+definition+of+area%22&source=bl&ots=jiarfVKaP5&sig=OAi9X-H7Hnp92BdfIuiIA911KSc&hl=en&ei=jJdXSomENIed_AahldSdCQ&sa=X&oi=book_result&ct=result&resnum=7

http://www.amazon.co.uk/gp/offer-listing/0133459438/ref=dp_olp_1?ie=UTF8&qid=1247256551&sr=8-1

http://www.amazon.com/gp/product/images/0486439461/ref=dp_image_0?ie=UTF8&n=283155&s=books

http://www.amazon.com/s/ref=nb_ss_b?url=search-alias%3Dstripbooks&field-keywords=Discrete+Mathematics&x=0&y=0

http://www.mathkb.com/Uwe/Forum.aspx/math/16463/Concept-of-measure-in-undergraduate-mathematics

http://www.google.de/search?hl=en&safe=off&client=firefox-a&rls=org.mozilla%3Aen-US%3Aofficial&hs=iW1&num=100&q=%22rigorous+definition+of+area%22&btnG=Search

http://www.youtube.com/results?search_query=The+Fundamental+Theorem+of+Calculus&search_type=&aq=f

http://www.youtube.com/watch?v=MOnnMlMM70Q&feature=PlayList&p=D4E266DF4E3352B1&index=18

Friday, June 26, 2009

A* / HPA* links and scribblings shared

There just was a question on the AiGameDev forums asking about A* (Astar), I remembered I had my own old links and scribblings somewhere so I shared them, so here they are if anybody needs them:

A* / HPA* links, references, implementation considerations:

http://docs.google.com/View?id=dcm3hb4r_30mjc2cj4j

A* basic theory scribblings:

http://jadnohra.net/release/AStar_Basic_Theory.pdf

If you have been following the blog you will see that I recently got into Reinforcement Learning and Dynamic Programming (see previous post), this gave me a much better overview about the 'essence' A* is and how it came to be, really just a case of applying dynamic programming.

The essence:

"Principle of Optimality: An optimal policy has the property that whatever the initial state and initial decision are, the remaining decisions must constitute an optimal policy with regard to the state resulting from the first decision." (written in 1957 by Bellman, a genius)

.

A* / HPA* links, references, implementation considerations:

http://docs.google.com/View?id=dcm3hb4r_30mjc2cj4j

A* basic theory scribblings:

http://jadnohra.net/release/AStar_Basic_Theory.pdf

If you have been following the blog you will see that I recently got into Reinforcement Learning and Dynamic Programming (see previous post), this gave me a much better overview about the 'essence' A* is and how it came to be, really just a case of applying dynamic programming.

The essence:

"Principle of Optimality: An optimal policy has the property that whatever the initial state and initial decision are, the remaining decisions must constitute an optimal policy with regard to the state resulting from the first decision." (written in 1957 by Bellman, a genius)

.

Sunday, June 21, 2009

Pure math versus game animation technology

Today was yet another enlightening day, the game developer part of me likes to call those days "Level-up" days, if you look at my AiGameDev forum posts, my twits on twitter, the discussions we have on #gameai IRC channel, my emails with Mathematicians I have never met, you could see the conext of today's enlightment, but here is the full story:

Questionable game animation technology research

Working on the AIGameDev animation system with Alex Champandard, we reached a rather experimental (for now) stage of wanting to use Reinforcement learning to learn the heuristic of an A* planner used for planning locomotion based on a given, non-annotated, step based, automatically generated motion graph.

The motivation of doing this might be questionable, but the whole thing developed step by step.

In summary we had the motion graph builders, written by Alex, he also had the brilliant idea of making them step based, using very sensible points in time to make animation transitions, we had the generic A* code I have written, we wanted to combine the two to have something moving on the screen, showing the usefulness of this foot-skate free motion graph approach.

This gave birth to the A* motion planner, A planner that is not very suitable for multiple characters in real time, a planner that only needs a motion graph, and no extra code in form of hand written controllers, that gets you from A to B in a fast, high transition quality way.

It was not very good at reaching the destination with an orientation constraint, it was possible, but it made the heuristic too complicated, almost like writing a manual controller, and much

slower. In theory, this should be very easy, or at least easily learnable.

Reinforcement Learning

There are papers that use RL for motion planning, pretty recent ones (starting 2007), like "Real-Time Planning for Parametrized Human Motion" or "Near Optimal Character Animation with Continuous Control", I mention those two because of I have them printed on my running-out-of-space desk, along with countless "Gleicher"/"Kovar" papers about animation and motion graphs. It was Alex who drove me into all of this, so any straying is both his fault, and also his merit, I am grateful, I have long been searching for something interesting, some of my previous jobs failed big there.

The idea was to learn the heuristic, making the planner suitable for real time, this might be questionable for some people, the industry mostly drives it's animations, no need for planning at all, simple, effective, KISS, and full of foot-skate.

At the AiGameDev conference in Paris this month, there was one panel where we discussed the uncanny valley, the need or no-need to cross it, I also discussed this afterwards with Chrisitiaan Moleman and Markus Mohr, Remco Straatman and others, I mention those because I was extremely pleasantly surprised about how passionate and nice those people and everybody else who was at the conference are, I am not getting tired of repeating this because it was remarkable.

So to go back, the motivation, is by all means questionable, except if we decide that we want to see how a next step in animation would look like, I am not saying we should be planning the whole time, there will be times for driving the animations and times for planning, but I'm keeping this under wraps for the moment, although it's not very difficult to imagine what I mean.

Applying Reinforcement Learning

So all of this got me into Reinforcement Learning, a classical reference is the Sutton/Barto book "Reinforcement Learning, an Introduction" available online for free. I also bought a couple of related books. I read the parts of online book I needed the 1st time and designed an RL approach to learning what we needed to learn to help the A* heuristic, the result was that it basically 'worked' but was a naive first take that needed refinement to be really useful, the details will be available to look at in the AiGamDev sandbox at some point. At this stage I had read parts of the online book some in more detailed than other, and I would say I had revisited some parts 3 or 4 times. I was at the stage where I understood what we need, make a first attempt, understood the problem much better because of it, and was ready to design the second take, which needed a technique that is a bit more evolved that the 1st technique I had used.

Reading in detail and understanding the Blues

I decided, that I do not feel like coming back to the basics anymore, and to read the full book in maximum detail, all while writing a take-away and never look back. This alone was very enlightening, there were many subtle issues that at the level of detail that I had read until then, blurred together and looked like one simple thing, I like to compare this with music (being an ex-quarter-musician). When you listen to Progressive Metal, Jazz, Blues the first time, and you listen to one second, then the second, you feel that they are the same thing ... it's all the same, and this is true, they are, and then fun is in the differences and nuances, which are more detailed and intricate than in other styles, in blues you have a small selection of rhythmic and chord patterns that it looks extremely limiting at first sight, it turns out, there is such a huge richness to express thanks to this limitation, it sets the context and allows you to play with the rest.

In a way I also find this related to trying to write solutions that are extremely general, I like generalizations, I like unified theories of everything (I hope the theoretical physicists will find a unifying theory that will allow us to do now cool practical stuff before I seize to exist, as an alternative, I hope they scientists find a way to escape immortality, another alternative is vampires really existing and after reading my wish here visiting me), I like being 'lazy' and writing code that will be reused by me and others in many different contexts without needing adapt it, but it turns out, in practice KISS is the way to go, just like Blues setting the limits, it is very important to set your own limits when choosing your problem, per example when designing your next AI, physics, graphics or gameplay technology. It is not easy, because what ends up limiting you are your computational resources and maybe the talent of your team, both are difficult if not impossible to measure for a designer.

So, I decided I wanted to give the book the full detail treatment, all with a take away that I am sharing online at: http://docs.google.com/View?id=dcm3hb4r_19gkzkdbdr.

So I started reading and thinking iteratively, taking breaks to think away from my desk to let things solidify. All looked good until I reached http://www.cs.ualberta.ca/~sutton/book/ebook/node34.html, Equation 3.10 line 3 to 4. Intuitively and logically, this made complete sense, but I felt the way this was written was trying to tell me that there is rigorous math derivation at work here, I asked 2 people by email about this, those were people I had met by accident on twitter, one of them very knowledgeable about Math, the other about AI, I also wrote at the AiGameDev forums, that was yesterday, I received a few replies, but I was not satisfied, eventually Alex took the time to discuss it with me and we agreed that there was no rigouros math involved, it was an mathematical expression of the backup diagram.

One additional help was the way this was presented in "http://paginas.fe.up.pt/~eol/schaefer/diplom/ReinforcementLearning.htm" saying:

This was our conclusion although I was expecting I would find a step by step expansion based on Mathematical rules and RL definitions, maybe using the rules of Linearity in Expectation and Iterated Expectation, applied to the RL definitions of environment models.

Rigorousness, a waste of time

In general I am very skeptical in accepting things without asking lots of 'why's.

I also have been getting a bit into rigorous math, because I see this as one of the current barrier standing between mean and world domination (ooops now everybody knows). I am trying to put a bit of time into improving and updating my math skills because it is a fact, that some academic papers or writing, no matter how useful like to use cryptic (for me currently) equations which could be expressed in a much nicer way in English. This is a long topic by itself, some months ago I was wondering that in order to prove something rigorously, you need to base it on another thing, but obviously, I thought, it must start somewhere, I set myself to search for that something in math, I found something probably obvious to all mathematicians, you need axioms to start with, you don't prove axioms. Now I know this from school, but I never thought of it this way, this journey took me many places mostly on wikipedia ending at the "There Ain't No Such Thing As A Free Lunch" theorem and it's sibling 'Full employment' which I thought was quite amazing and increased my new found love to going back to Math, one can actually logicall prove that computer scienetists will never run out of jobs, well done Math!

Enlightenment, I am not alone

Alex was of the opinion that I am taking it too far again and that this is not useful, but I was able to partially convince of the usefulness because it would make many more papers and academic writing accessible to me. Anyway, I kept digging, this time into the base of the equations I wanted to detail, the Bellman Equations, which led me to this document: http://www.wu.ac.at/usr/h99c/h9951826/bellman_dynprog.pdf, this is the main topic of this post, why? because this genius named Richard Bellman, who writes "At Stanford I had a chance to do analytic number theory, which I had wanted to do since I was sixteen." !! touched on many of the topics I am worried about and that I described here and constantly trying to understand better, it was extremely enlightening, Level up, here are some quotes and their relation to this post:

A lot of work and time goes into what becomes a one line 'obvious' equation, it was not always obvious! not even to the person that came up with it, treating it as obvious is almost a crime. However I also understand that there is the danger of tying one's legs to the chair if one wants to do this for every tiny bit of theory, not getting any new progress done is a crime as well, balance is key, as usual. This exact dilemma is what started me on today's enlightenment Journey, and again Bellman touches on that :), using a writing style 100x superior to mine of course, but the idea is there ...

Conclusion

Level up.

.

Questionable game animation technology research

Working on the AIGameDev animation system with Alex Champandard, we reached a rather experimental (for now) stage of wanting to use Reinforcement learning to learn the heuristic of an A* planner used for planning locomotion based on a given, non-annotated, step based, automatically generated motion graph.

The motivation of doing this might be questionable, but the whole thing developed step by step.

In summary we had the motion graph builders, written by Alex, he also had the brilliant idea of making them step based, using very sensible points in time to make animation transitions, we had the generic A* code I have written, we wanted to combine the two to have something moving on the screen, showing the usefulness of this foot-skate free motion graph approach.

This gave birth to the A* motion planner, A planner that is not very suitable for multiple characters in real time, a planner that only needs a motion graph, and no extra code in form of hand written controllers, that gets you from A to B in a fast, high transition quality way.

It was not very good at reaching the destination with an orientation constraint, it was possible, but it made the heuristic too complicated, almost like writing a manual controller, and much

slower. In theory, this should be very easy, or at least easily learnable.

Reinforcement Learning

There are papers that use RL for motion planning, pretty recent ones (starting 2007), like "Real-Time Planning for Parametrized Human Motion" or "Near Optimal Character Animation with Continuous Control", I mention those two because of I have them printed on my running-out-of-space desk, along with countless "Gleicher"/"Kovar" papers about animation and motion graphs. It was Alex who drove me into all of this, so any straying is both his fault, and also his merit, I am grateful, I have long been searching for something interesting, some of my previous jobs failed big there.

The idea was to learn the heuristic, making the planner suitable for real time, this might be questionable for some people, the industry mostly drives it's animations, no need for planning at all, simple, effective, KISS, and full of foot-skate.

At the AiGameDev conference in Paris this month, there was one panel where we discussed the uncanny valley, the need or no-need to cross it, I also discussed this afterwards with Chrisitiaan Moleman and Markus Mohr, Remco Straatman and others, I mention those because I was extremely pleasantly surprised about how passionate and nice those people and everybody else who was at the conference are, I am not getting tired of repeating this because it was remarkable.

So to go back, the motivation, is by all means questionable, except if we decide that we want to see how a next step in animation would look like, I am not saying we should be planning the whole time, there will be times for driving the animations and times for planning, but I'm keeping this under wraps for the moment, although it's not very difficult to imagine what I mean.

Applying Reinforcement Learning

So all of this got me into Reinforcement Learning, a classical reference is the Sutton/Barto book "Reinforcement Learning, an Introduction" available online for free. I also bought a couple of related books. I read the parts of online book I needed the 1st time and designed an RL approach to learning what we needed to learn to help the A* heuristic, the result was that it basically 'worked' but was a naive first take that needed refinement to be really useful, the details will be available to look at in the AiGamDev sandbox at some point. At this stage I had read parts of the online book some in more detailed than other, and I would say I had revisited some parts 3 or 4 times. I was at the stage where I understood what we need, make a first attempt, understood the problem much better because of it, and was ready to design the second take, which needed a technique that is a bit more evolved that the 1st technique I had used.

Reading in detail and understanding the Blues

I decided, that I do not feel like coming back to the basics anymore, and to read the full book in maximum detail, all while writing a take-away and never look back. This alone was very enlightening, there were many subtle issues that at the level of detail that I had read until then, blurred together and looked like one simple thing, I like to compare this with music (being an ex-quarter-musician). When you listen to Progressive Metal, Jazz, Blues the first time, and you listen to one second, then the second, you feel that they are the same thing ... it's all the same, and this is true, they are, and then fun is in the differences and nuances, which are more detailed and intricate than in other styles, in blues you have a small selection of rhythmic and chord patterns that it looks extremely limiting at first sight, it turns out, there is such a huge richness to express thanks to this limitation, it sets the context and allows you to play with the rest.

In a way I also find this related to trying to write solutions that are extremely general, I like generalizations, I like unified theories of everything (I hope the theoretical physicists will find a unifying theory that will allow us to do now cool practical stuff before I seize to exist, as an alternative, I hope they scientists find a way to escape immortality, another alternative is vampires really existing and after reading my wish here visiting me), I like being 'lazy' and writing code that will be reused by me and others in many different contexts without needing adapt it, but it turns out, in practice KISS is the way to go, just like Blues setting the limits, it is very important to set your own limits when choosing your problem, per example when designing your next AI, physics, graphics or gameplay technology. It is not easy, because what ends up limiting you are your computational resources and maybe the talent of your team, both are difficult if not impossible to measure for a designer.

So, I decided I wanted to give the book the full detail treatment, all with a take away that I am sharing online at: http://docs.google.com/View?id=dcm3hb4r_19gkzkdbdr.

So I started reading and thinking iteratively, taking breaks to think away from my desk to let things solidify. All looked good until I reached http://www.cs.ualberta.ca/~sutton/book/ebook/node34.html, Equation 3.10 line 3 to 4. Intuitively and logically, this made complete sense, but I felt the way this was written was trying to tell me that there is rigorous math derivation at work here, I asked 2 people by email about this, those were people I had met by accident on twitter, one of them very knowledgeable about Math, the other about AI, I also wrote at the AiGameDev forums, that was yesterday, I received a few replies, but I was not satisfied, eventually Alex took the time to discuss it with me and we agreed that there was no rigouros math involved, it was an mathematical expression of the backup diagram.

One additional help was the way this was presented in "http://paginas.fe.up.pt/~eol/schaefer/diplom/ReinforcementLearning.htm" saying:

The diagram shows that when initially in state s action a is selected, the successor state is s1 and reward r1 is expected but also r2 is expected passing to s2. If in state s action b is chosen, reward r3 is expected and leads to s1 but also reward r4 is expected and leads to state s2.

This diagram can be described by the following Bellman-Equation: ...

This was our conclusion although I was expecting I would find a step by step expansion based on Mathematical rules and RL definitions, maybe using the rules of Linearity in Expectation and Iterated Expectation, applied to the RL definitions of environment models.

Rigorousness, a waste of time

In general I am very skeptical in accepting things without asking lots of 'why's.

I also have been getting a bit into rigorous math, because I see this as one of the current barrier standing between mean and world domination (ooops now everybody knows). I am trying to put a bit of time into improving and updating my math skills because it is a fact, that some academic papers or writing, no matter how useful like to use cryptic (for me currently) equations which could be expressed in a much nicer way in English. This is a long topic by itself, some months ago I was wondering that in order to prove something rigorously, you need to base it on another thing, but obviously, I thought, it must start somewhere, I set myself to search for that something in math, I found something probably obvious to all mathematicians, you need axioms to start with, you don't prove axioms. Now I know this from school, but I never thought of it this way, this journey took me many places mostly on wikipedia ending at the "There Ain't No Such Thing As A Free Lunch" theorem and it's sibling 'Full employment' which I thought was quite amazing and increased my new found love to going back to Math, one can actually logicall prove that computer scienetists will never run out of jobs, well done Math!

Enlightenment, I am not alone

Alex was of the opinion that I am taking it too far again and that this is not useful, but I was able to partially convince of the usefulness because it would make many more papers and academic writing accessible to me. Anyway, I kept digging, this time into the base of the equations I wanted to detail, the Bellman Equations, which led me to this document: http://www.wu.ac.at/usr/h99c/h9951826/bellman_dynprog.pdf, this is the main topic of this post, why? because this genius named Richard Bellman, who writes "At Stanford I had a chance to do analytic number theory, which I had wanted to do since I was sixteen." !! touched on many of the topics I am worried about and that I described here and constantly trying to understand better, it was extremely enlightening, Level up, here are some quotes and their relation to this post:

“An interesting question is, ‘Where did the name,This is related to the desire of people to research things, and that in the end come up with useful things because of that, despite it seeming pointless to the 'management'. Is it pointless looking into RL for locomotion?

dynamic programming, come from?’ The 1950s were not

good years for mathematical research. We had a very interesting

gentleman in Washington named Wilson. He was

Secretary of Defense, and he actually had a pathological

fear and hatred of the word, research. I’m not using the

term lightly; I’m using it precisely. His face would suffuse,

he would turn red, and he would get violent if people used

the term, research, in his presence."

"Let’s take a word that has anThere are many ways to present the same idea, it is ok to choose the one that fits the target. This is not directly related and not new, but it shows that even something as cool as RL needed this to start off.

absolutely precise meaning, namely dynamic, in the classical

physical sense. It also has a very interesting property

as an adjective, and that is it’s impossible to use the word,

dynamic, in a pejorative sense. Try thinking of some combination

that will possibly give it a pejorative meaning.

It’s impossible. Thus, I thought dynamic programming was

a good name. It was something not even a Congressman

could object to. So I used it as an umbrella for my activities”

“I could either be a traditional intellectual, or a modernThat's also one of my ongoing concerns that no one else seems to worry about, finding the right balance, and even Bellman used to think about it.

intellectual using the results of my research for the

problems of contemporary society. This was a dangerous

path. Either I could do too much research and too little

application, or too little research and too much application."

“My first task in dynamic programming was to put it onAha, so that's where it all comes from! intuition! and not equation 3.10 with cryptic expansion steps. Relieving...

a rigorous basis. I found that I was using the same technique

over and over again to derive a functional equation.

I decided to call this technique “The principle of optimality.”

Oliver Gross said one day, ‘The principle is not rigorous.’

I replied, ‘Of course not. It’s not even precise.’ A good

principle should guide the intuition."

"This isCoding-wize, I tended to be on the 'ties a chair to his legs' type but that was long time ago, and it actually is useful to be in this state for a limited amount of time, checking out the possible extremes is always good, even Buddha checked the extremes before finding the golden middle, it is only logical, how can you know where the middle is if you have never seen where the extremes are! I am therefore happy I have been at both extremes and have developed a good eye for the Golden middle, not only in code.

pertinent to a comment made by Felix Klein, the great

German mathematician, concerning a certain type of mathematician.

When this individual discovers that he can jump

across a stream, he returns to the other side, ties a chair

to his leg, and sees if he can still jump across the stream.

Some may enjoy this sport; others, like myself, may feel

that it is more fun to see if you can jump across bigger

streams, or at least different ones"

“What is worth noting about the foregoing developmentI found this one great as well, equations are thrown at us in lectures, in papers, in tutorials, and we are supposed to just say yes it makes sense, this is usually not enough for me, I prefer to be able to get into the context which allowed the 'inventor' to come up with those ideas, based on what he knew, the problem he faced, and how he thought to come up with what he came up with.

is that I should have seen the application of dynamic programming

to control theory several years before. I should

have, but I didn’t. It is very well to start a lecture by saying,

‘Clearly, a control process can be regarded as a multistage

decision process in which... ,’ but it is a bit misleading.

Scientific developments can always be made logical and

rational with sufficient hindsight. It is amazing, however,

how clouded the crystal ball looks beforehand. We all wear

such intellectual blinders and make such inexplicable blunders

that it is amazing that any progress is made at all."

A lot of work and time goes into what becomes a one line 'obvious' equation, it was not always obvious! not even to the person that came up with it, treating it as obvious is almost a crime. However I also understand that there is the danger of tying one's legs to the chair if one wants to do this for every tiny bit of theory, not getting any new progress done is a crime as well, balance is key, as usual. This exact dilemma is what started me on today's enlightenment Journey, and again Bellman touches on that :), using a writing style 100x superior to mine of course, but the idea is there ...

"All this contributes to the misleading nature of conventional

history, whether it be analysis of a scientific discovery

or of a political movement. We are always looking at

the situation from the wrong side, when events have already

been frozen in time. Since we know what happened, it is

not too difficult to present convincing arguments to justify a

particular course of events. None of these analyses must be

taken too seriously, no more than Monday morning quarterbacking."

Conclusion

Level up.

.

Subscribe to:

Posts (Atom)