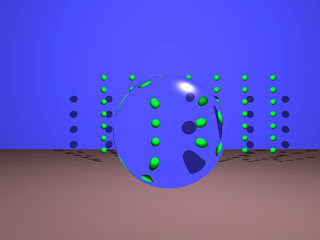

In the last 3 days all the work has been on optimization, below is a summary of changes and the speedups they produces (speedup = old render time / new render time)

- Changing intersector from Trumbore/Moller to Optimized Wald Projection -> 0.25

- 2x2 Packet Tracing (SSE/SSE2 using SIMD intrinsics) -> 3.0-4.0

- Including ordering information into traversal (do not traverse BVH box if intersection was found in previous boxes and t intersection <> 1.8, 2x2-> 1.3

- SIMDifying primary ray packet generation (instead of looping to generate the 4 rays) -> 1.05

- Changing BVH traversal from recursive to iterative: Mono->1.04, 2x2->soon

- Changing BVH memory allocation scheme to improve traversal cache friendliness: soon

- Chancing BVH build algorithm (to SAH?): soon

I am also planning to have a look at gcc's profile guided optimization. Acovea seems to be a fun thing to try as well.

Talking about low level optimization, I recently read Randall Hyde's Write Great Code-Vol I, and reading Vol II, which are nice as refreshers on assembly and low level optimization as well as nice summaries of what modern compilers do and more importantly do not do although one might expect it.

The publicly available Software Optimization Guide for AMD64 Processors is also similar in a way, in that it provides a list of do's and dont's for the mentioned processors, but many things apply in general as well, the nice thing is that there is an example for each case.

Another fun thing is superoptimizers, and the fact that a perfect super optimization is actually a NP-Hard problem and that is of course not a surprise, the interesting thing about it is that actually most if not all fun games are also NP-Hard, since as soon as a game is found not to be NP-Hard it is considered 'solved', and it not really fun to play when one knows the solution,

this might explain why so many of us are attracted to optimization because it is actually NP-Hard (fun!). The same goes for AI and other game related technologies.

Basically all we are trying to do is to surf on the edge of the current technology and try to push the most out of it by using algorithms that were not possible before but are almost possible now if optimized.